Configure an Azure Data Connection

LiveRamp Clean Room’s application layer enables companies to securely connect distributed datasets with full control and flexibility while protecting the privacy of consumers and the rights of data owners.

To configure an Azure data connection, see the instructions below.

Overall Steps

Perform the following overall steps to configure an Azure data connection in LiveRamp Clean Room:

Generate an Azure SAS token.

Add the credentials.

Create the data connection.

Map the fields.

For information on performing these steps, see the sections below.

Prerequisites

The following information is needed to configure your Azure data connection in LiveRamp Clean Room:

Azure Storage Account Name

Container Name

Path to the blob directory where your data files are stored

(Optional) Partition columns to use for optimizing question performance

Guidelines

Review the following guidelines before starting the setup process:

If the data is stored at the container level and there is no blob directory under the container, leave the blob directory path empty. If the raw data files are in a blob directory, the blob path should point to the top-level directory where all the data files reside. There should not be a mix of data files and sub-directories.

LiveRamp Clean Room supports CSV and Parquet files, as well as multi-part files. All files should have a file extension. All CSV files must have a header in the first row. Headers should not have any spaces or special characters and should not exceed 50 characters. An underscore can be used in place of a space.

The folders where the data files are dropped should use Hive-style date formatting: "date=yyyy-MM-dd".
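You can check files against these guidelines locally before uploading them. The following is a minimal Python sketch, not a LiveRamp tool: the function names `check_layout`, `check_header`, and `check_date_folder` are hypothetical, and the header check assumes that "special characters" means anything other than ASCII letters, digits, and underscores, which the guidelines do not define exactly. It works on a plain list of blob names rather than calling Azure.

```python
import csv
import re
from datetime import datetime

# Assumption: allowed header characters are ASCII letters, digits, and underscores.
HEADER_RE = re.compile(r"[A-Za-z0-9_]{1,50}")
# Hive-style date folder, for example "date=2024-01-31".
DATE_DIR_RE = re.compile(r"date=(\d{4}-\d{2}-\d{2})")


def check_layout(blob_names, blob_path=""):
    """Return layout problems for blobs under blob_path ("" means container level)."""
    prefix = blob_path.rstrip("/") + "/" if blob_path else ""
    has_files = has_dirs = False
    problems = []
    for name in blob_names:
        if not name.startswith(prefix):
            continue  # outside the configured blob path
        rest = name[len(prefix):]
        if "/" in rest:
            has_dirs = True   # blob sits inside a sub-directory
        else:
            has_files = True  # data file directly in the blob path
        filename = rest.rsplit("/", 1)[-1]
        if "." not in filename:
            problems.append(f"missing file extension: {name}")
    if has_files and has_dirs:
        problems.append("data files and sub-directories are mixed at the top level")
    return problems


def check_header(first_line, delimiter=","):
    """Return the header names in a CSV first line that break the naming rules."""
    columns = next(csv.reader([first_line], delimiter=delimiter))
    return [c for c in columns if not HEADER_RE.fullmatch(c)]


def check_date_folder(folder):
    """True if folder is a valid Hive-style "date=yyyy-MM-dd" name."""
    match = DATE_DIR_RE.fullmatch(folder)
    if not match:
        return False
    try:
        datetime.strptime(match.group(1), "%Y-%m-%d")  # rejects dates like 2024-02-30
    except ValueError:
        return False
    return True
```

For example, `check_header("first name,email@addr")` flags both columns, while `first_name` would pass.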

Generate an Azure SAS Token

You can generate the Azure SAS token in the Microsoft Azure portal at either the container level or the account level. The process for each is slightly different, as outlined below.

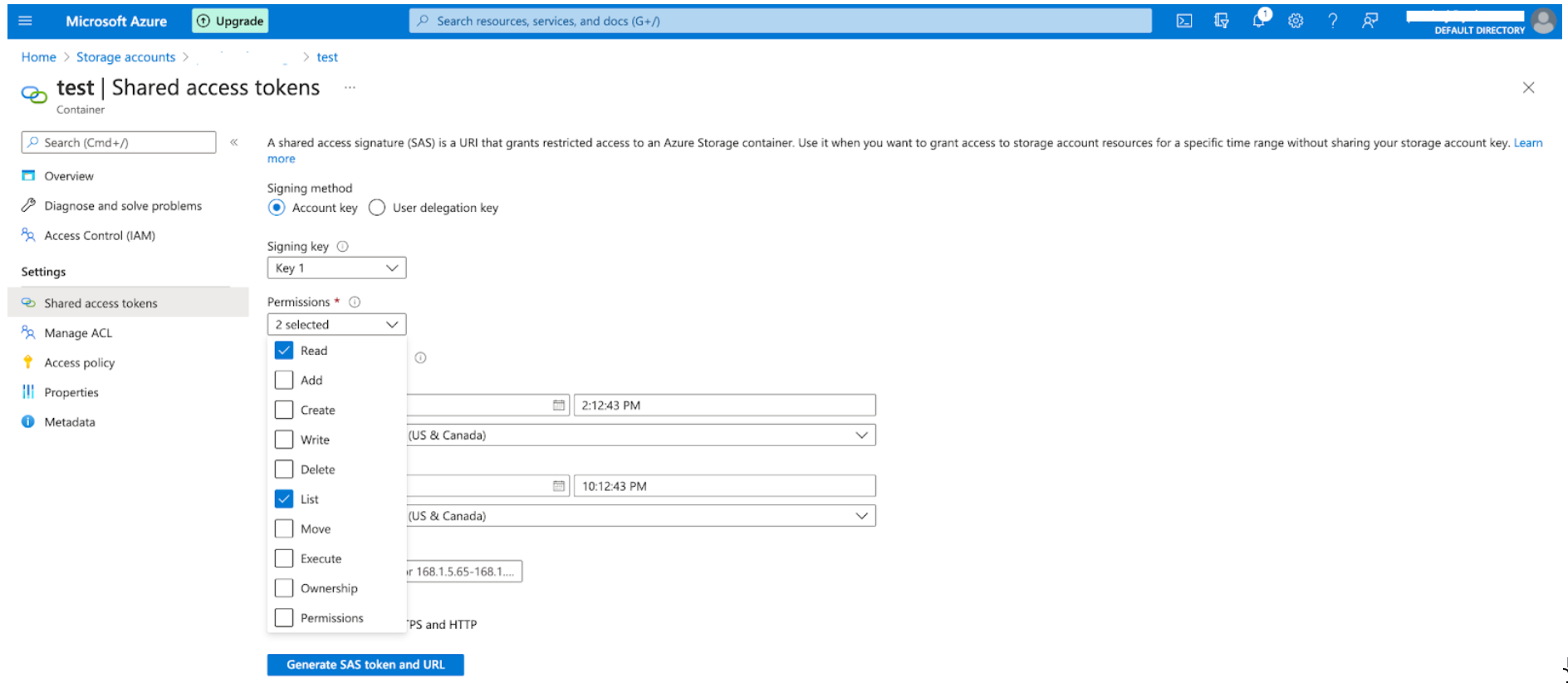

Generate an SAS token at the container level:

Navigate to the storage account, select the container, and then select "Shared access tokens" under "Settings" in the left navigation pane.

Under the Permissions dropdown, check the check boxes for "Read" and "List" (both permission levels are required).

Specify the expiration of the token.

Note

Create the SAS token as a long-lived token by setting an expiration date far in the future. Once the token expires, LiveRamp Clean Room can no longer access the data through it.

Click .

Record the generated Blob SAS token.
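Before adding the token to LiveRamp Clean Room, you can sanity-check it locally. The following is a minimal Python sketch that reads the standard SAS query parameters `sp` (permissions, where `r` is Read and `l` is List), `sr` (signed resource, `c` for a container), and `se` (expiry time). The helper names and the 365-day `min_days` threshold for "long-lived" are hypothetical; the steps above do not define a specific token lifetime.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs


def parse_sas(token):
    """Split a SAS query string (with or without a leading "?") into single values."""
    params = parse_qs(token.lstrip("?"), keep_blank_values=True)
    return {key: values[0] for key, values in params.items()}


def parse_expiry(value):
    """Parse the "se" value, which may be a full UTC timestamp or a date only."""
    for fmt in ("%Y-%m-%dT%H:%M:%SZ", "%Y-%m-%d"):
        try:
            return datetime.strptime(value, fmt).replace(tzinfo=timezone.utc)
        except ValueError:
            pass
    raise ValueError(f"unrecognized expiry: {value}")


def check_container_sas(token, now=None, min_days=365):
    """Return problems with a container-level SAS token; an empty list means OK."""
    params = parse_sas(token)
    problems = []
    perms = params.get("sp", "")
    for flag, label in (("r", "Read"), ("l", "List")):
        if flag not in perms:
            problems.append(f"missing {label} permission")
    if params.get("sr") != "c":
        problems.append("token is not scoped to a container (sr=c)")
    expiry = params.get("se")
    if expiry is None:
        problems.append("no expiry (se) found")
    else:
        now = now or datetime.now(timezone.utc)
        if parse_expiry(expiry) - now < timedelta(days=min_days):
            problems.append(f"expires within {min_days} days")
    return problems
```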

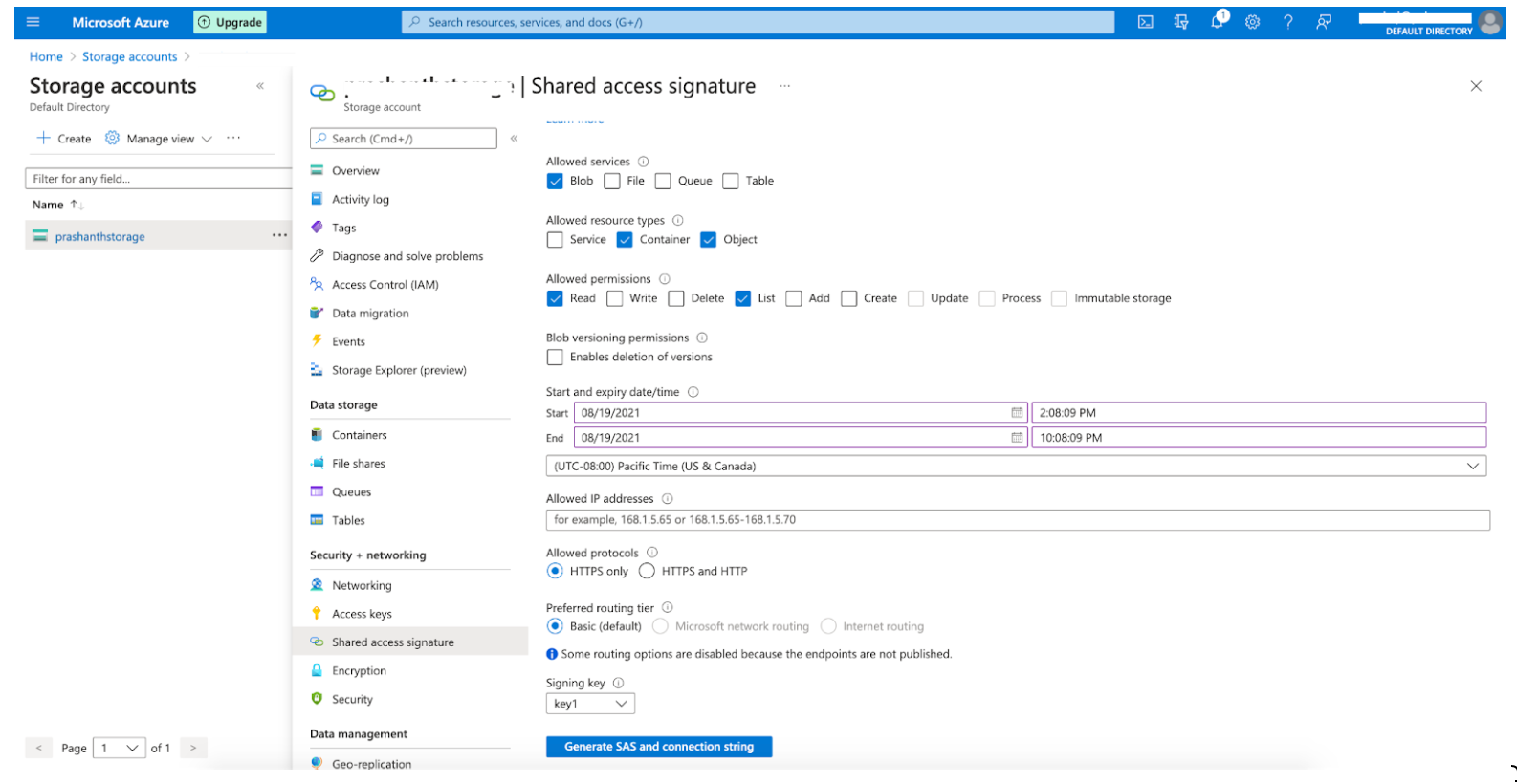

Generate an SAS token at the account level:

Navigate to the storage account and then select "Shared access signature" under "Security + Networking" in the left navigation pane.

The shared access signature screen displays.

Under "Allowed Services", check the check box for "Blob".

Under "Allowed resource types", check the check boxes for "Container" and "Object" (both resource types are required).

Under "Allowed permissions", check the check boxes for "Read" and "List" (both permission levels are required).

Click .

Record the generated SAS token.
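An account-level token can be checked the same way. This minimal Python sketch assumes the standard account SAS query parameters `ss` (allowed services, `b` for Blob), `srt` (allowed resource types, `c` for Container and `o` for Object), and `sp` (permissions, `r` for Read and `l` for List). The function name `check_account_sas` is hypothetical, and the sketch does not check the expiry.

```python
from urllib.parse import parse_qs

# Required flags per account SAS parameter, mirroring the portal settings above.
REQUIRED = {
    "ss": {"b": "Blob service"},
    "srt": {"c": "Container resource type", "o": "Object resource type"},
    "sp": {"r": "Read permission", "l": "List permission"},
}


def check_account_sas(token):
    """Return problems with an account-level SAS token; an empty list means OK."""
    params = {key: values[0] for key, values in parse_qs(token.lstrip("?")).items()}
    problems = []
    for field, flags in REQUIRED.items():
        present = params.get(field, "")
        for flag, label in flags.items():
            if flag not in present:
                problems.append(f"missing {label} ({field} lacks '{flag}')")
    return problems
```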

Add the Credentials

To add credentials:

Note

Credentials can also be configured when creating the data connection.

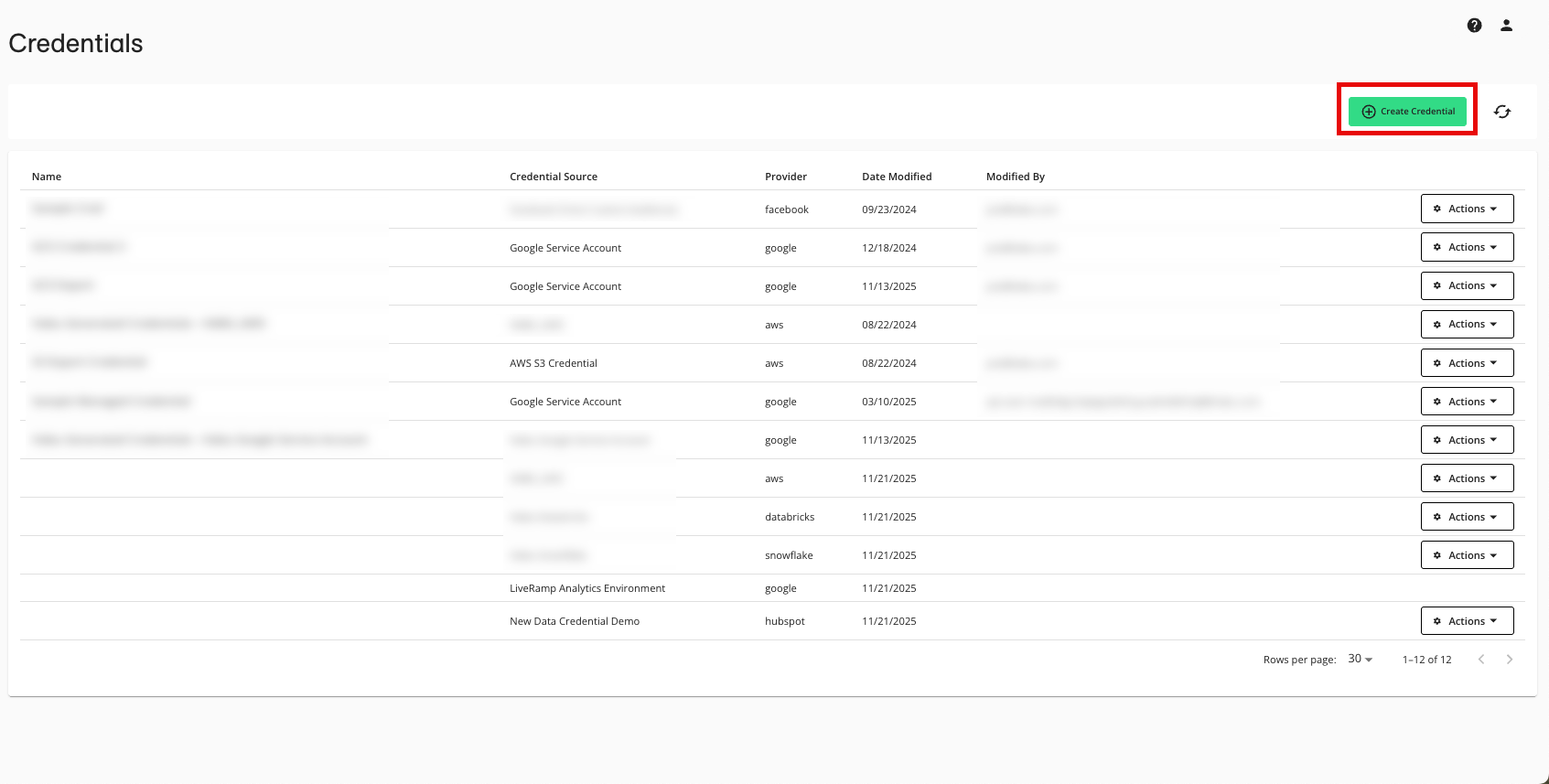

From the LiveRamp Clean Room navigation pane, select Data Management → Credentials.

Click .

Enter a descriptive name for the credential.

For the Credentials Type, select "Azure SAS Token".

Enter the SAS token you generated in the previous procedure.

Click .

Create the Data Connection

To create the data connection:

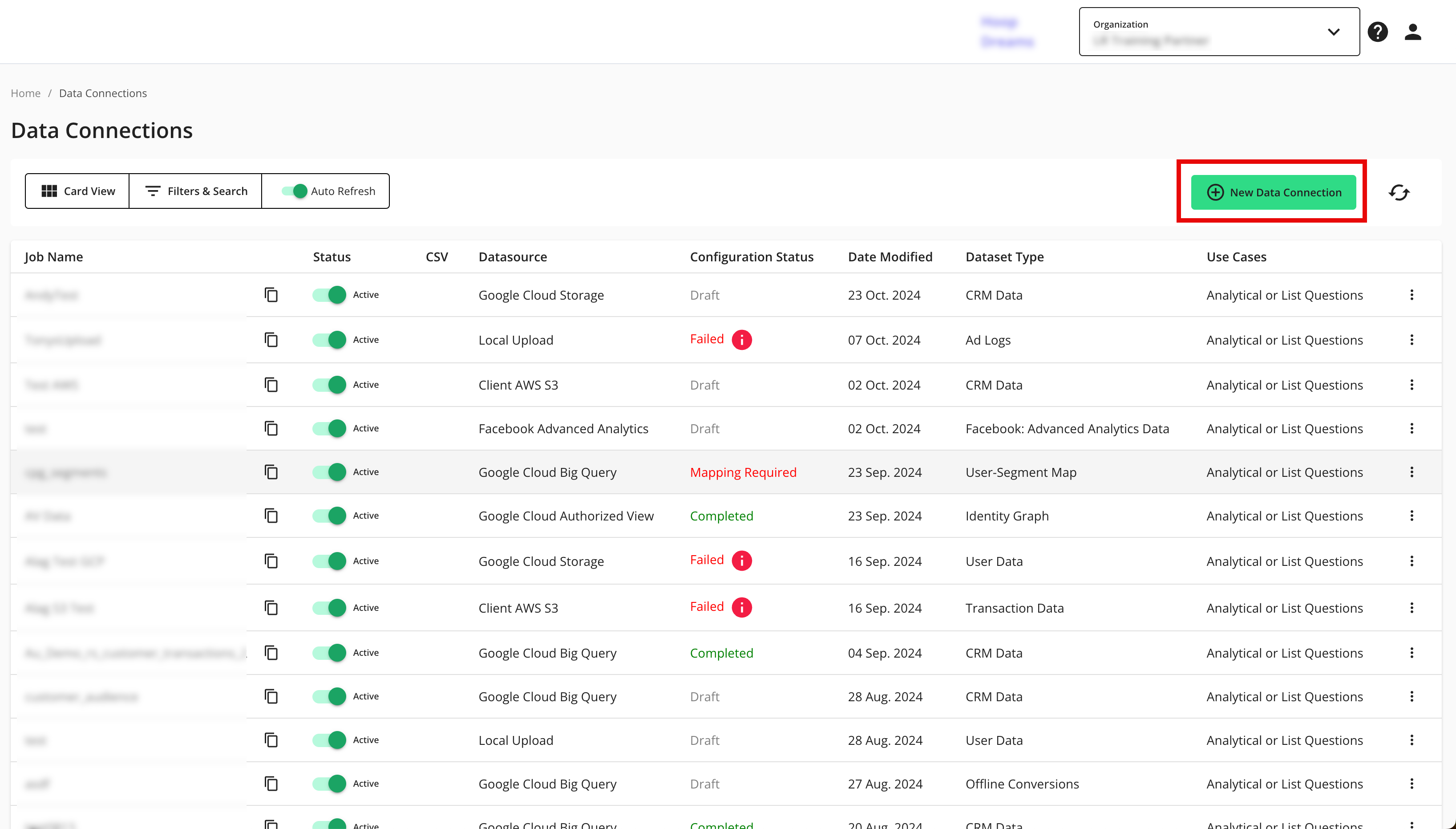

From the LiveRamp Clean Room navigation pane, select Data Management → Data Connections.

From the Data Connections page, click .

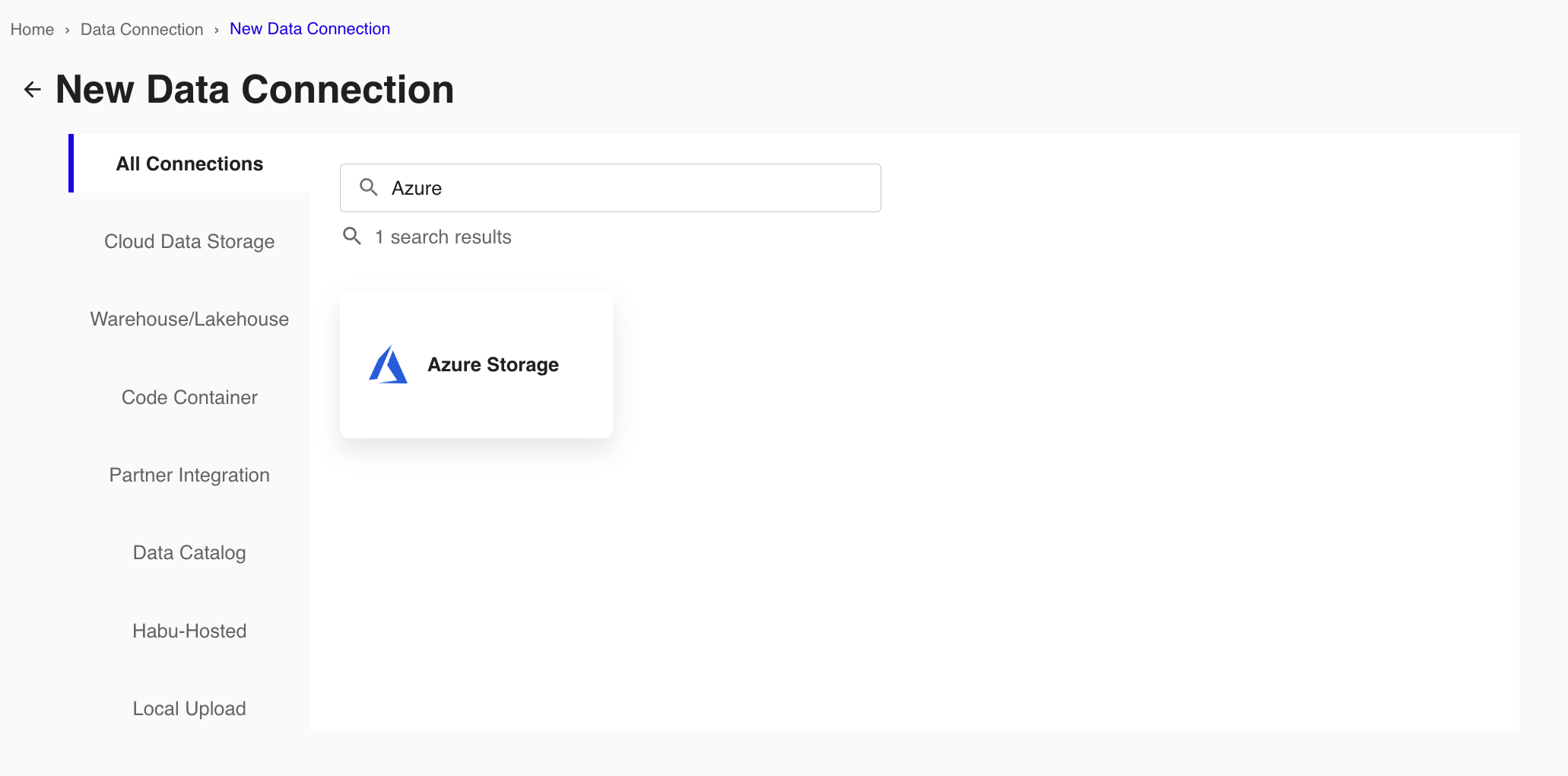

From the New Data Connection screen, select "Azure Storage".

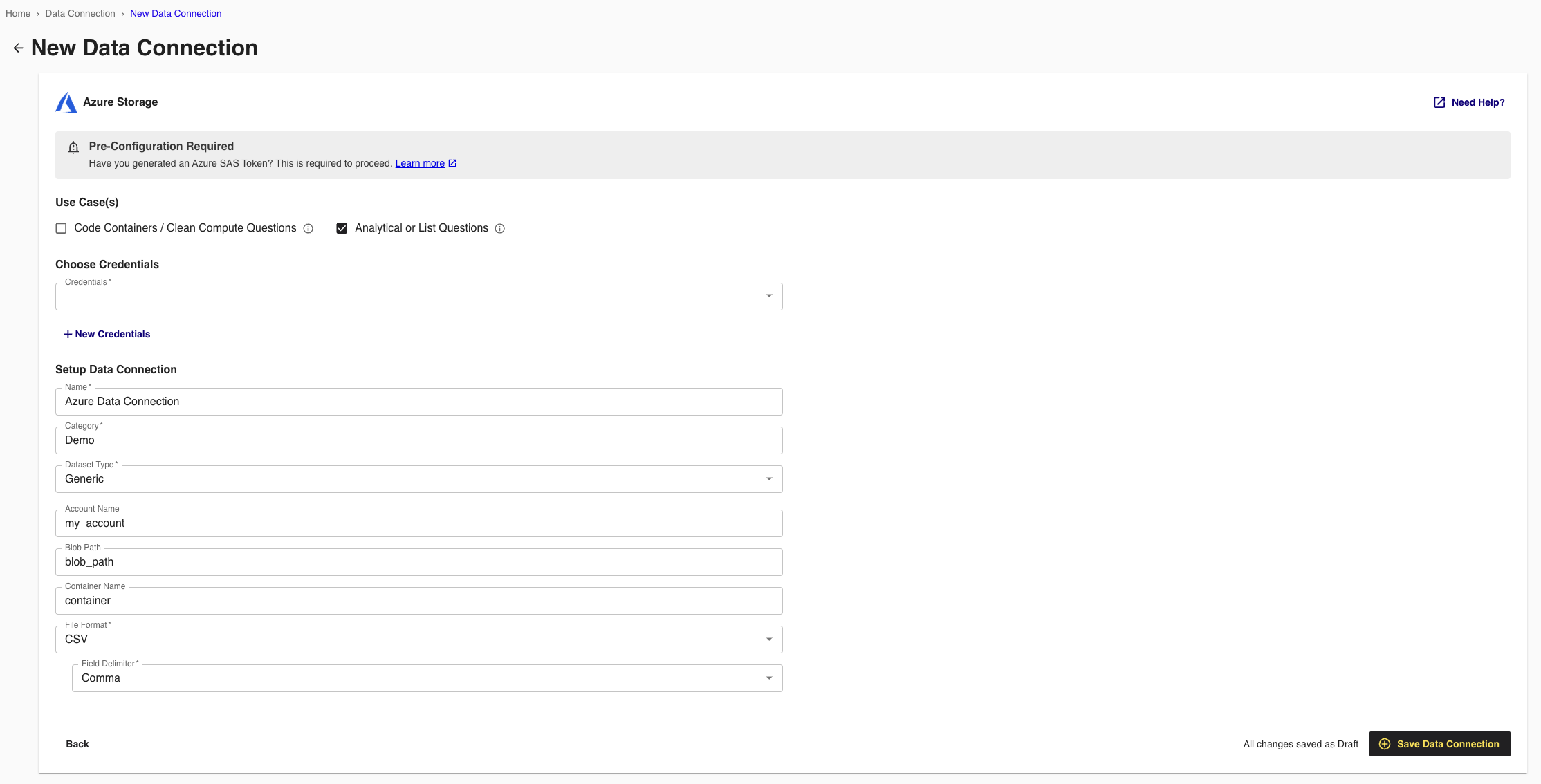

From the New Data Connection screen, select the credentials you created in the previous procedure from the dropdown, or create new credentials as necessary.

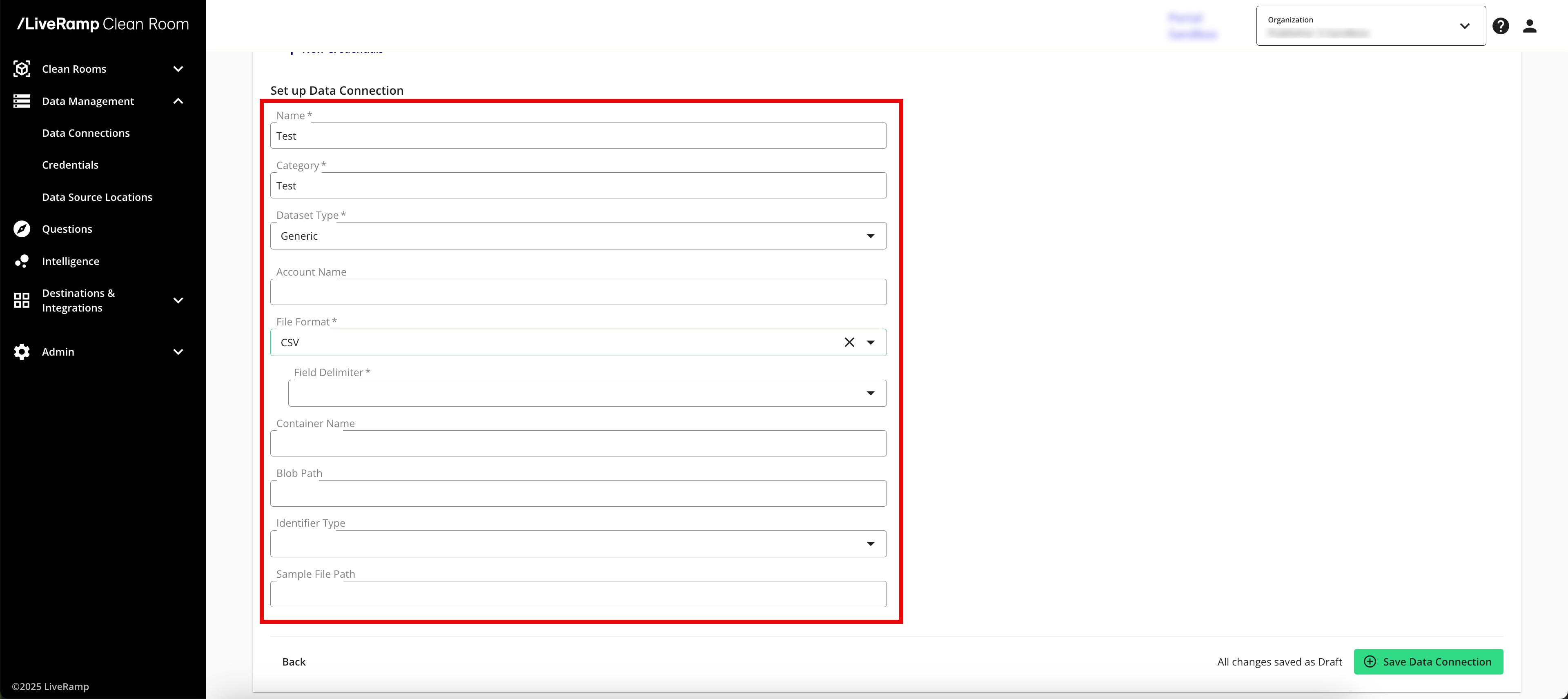

Configure the data connection:

Name: Enter a name of your choice.

Category: Enter a category of your choice.

Dataset Type: Select Generic.

Account Name: Enter your Azure Storage account name.

File Format: Select CSV, Parquet, or Delta.

Note

All files must have a header in the first row. Headers should not have any spaces or special characters and should not exceed 50 characters. An underscore can be used in place of a space.

If you are uploading a CSV file, avoid enclosing values in double quotes (such as "First Name" or "Country").

Field Delimiter: If you're uploading CSV files, select the delimiter to use (comma, semicolon, pipe, or tab).

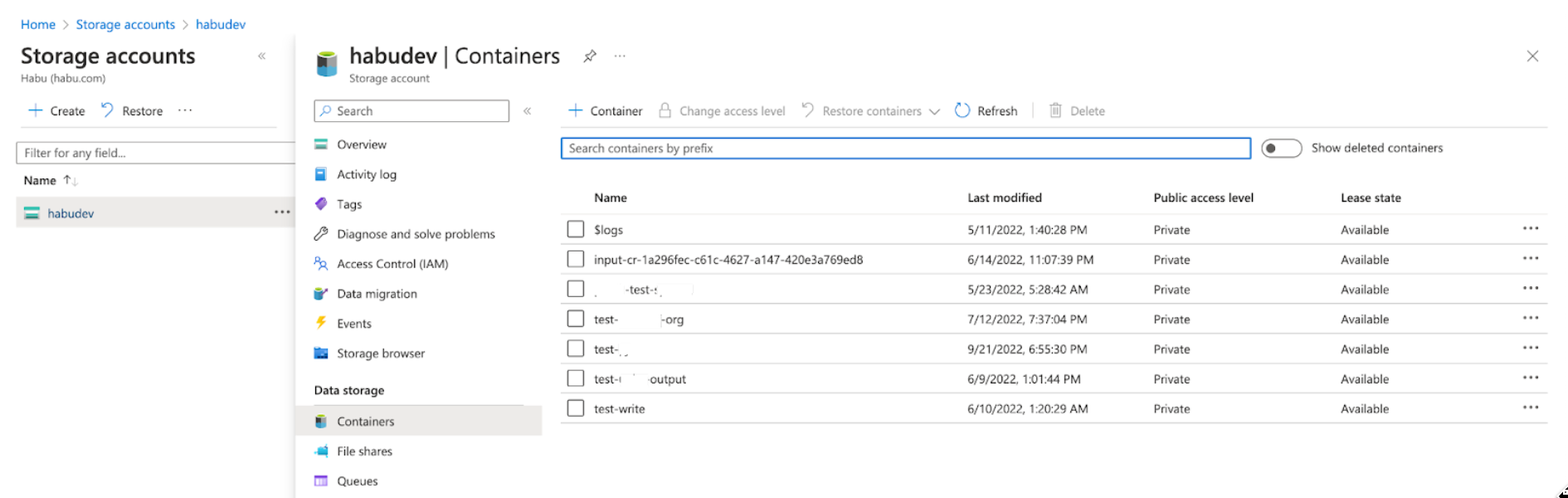

Container Name: Enter the container name found in your storage account, under Data Storage > Containers.

Blob Path: Enter the path to the folder containing the data files. The Blob Path is the part of the URL that follows the container name. For example, in the sample path "http://account-name.blob.core.windows.net/container-name/blob-path", the Blob Path is "blob-path".

Note

If the data files are at the container level, the Blob Path field should be left empty.

Do not include a forward slash at the end of the Blob Path.

Do not include the raw data file name.

Job Frequency: Select the frequency with which the data at that path is updated (Daily, Weekly, Monthly, or On-Demand).

Sample File Path: (Optional) If you are defining partition columns for optimizing question performance, enter the path to a sample data file.

For example: blob_path/brand-id=1234/file.csv

Review the data connection details and click .

Note

All configured data connections can be seen on the Data Connections page.

Upload your data files to your specified location.

When a connection is first configured, its configuration status is "Verifying Access". Once the connection is confirmed and the status changes to "Mapping Required", map the table's fields.

You will receive file processing notifications via email.
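The Blob Path rules in the procedure above can be sketched as follows. This minimal Python example assumes URLs of the form `https://<account>.blob.core.windows.net/<container>/<path>` and splits a blob URL into values for the Account Name, Container Name, and Blob Path fields. The names `split_blob_url` and `check_blob_path` are hypothetical, and the file-name check is only a heuristic that looks for a `.csv` or `.parquet` extension on the last path segment.

```python
from urllib.parse import urlparse


def split_blob_url(url):
    """Split an Azure Blob URL into (account name, container name, blob path)."""
    parsed = urlparse(url)
    account = parsed.netloc.split(".", 1)[0]        # "account-name" from the host
    parts = parsed.path.strip("/").split("/", 1)    # drops leading/trailing slashes
    container = parts[0]
    blob_path = parts[1] if len(parts) > 1 else ""  # empty means container level
    return account, container, blob_path


def check_blob_path(blob_path):
    """Return problems with a Blob Path value; an empty path is valid."""
    problems = []
    if blob_path.endswith("/"):
        problems.append("remove the trailing forward slash")
    last = blob_path.rsplit("/", 1)[-1].lower()
    if last.endswith((".csv", ".parquet")):  # heuristic: looks like a data file
        problems.append("remove the data file name")
    return problems
```

Because `split_blob_url` strips slashes from the path, its output already satisfies the no-trailing-slash rule.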

Map the Fields

Once the connection is confirmed and the status has changed to "Mapping Required", map the table's fields and add metadata:

From the row for the newly created data connection, click the More Options menu (the three dots) and then click .

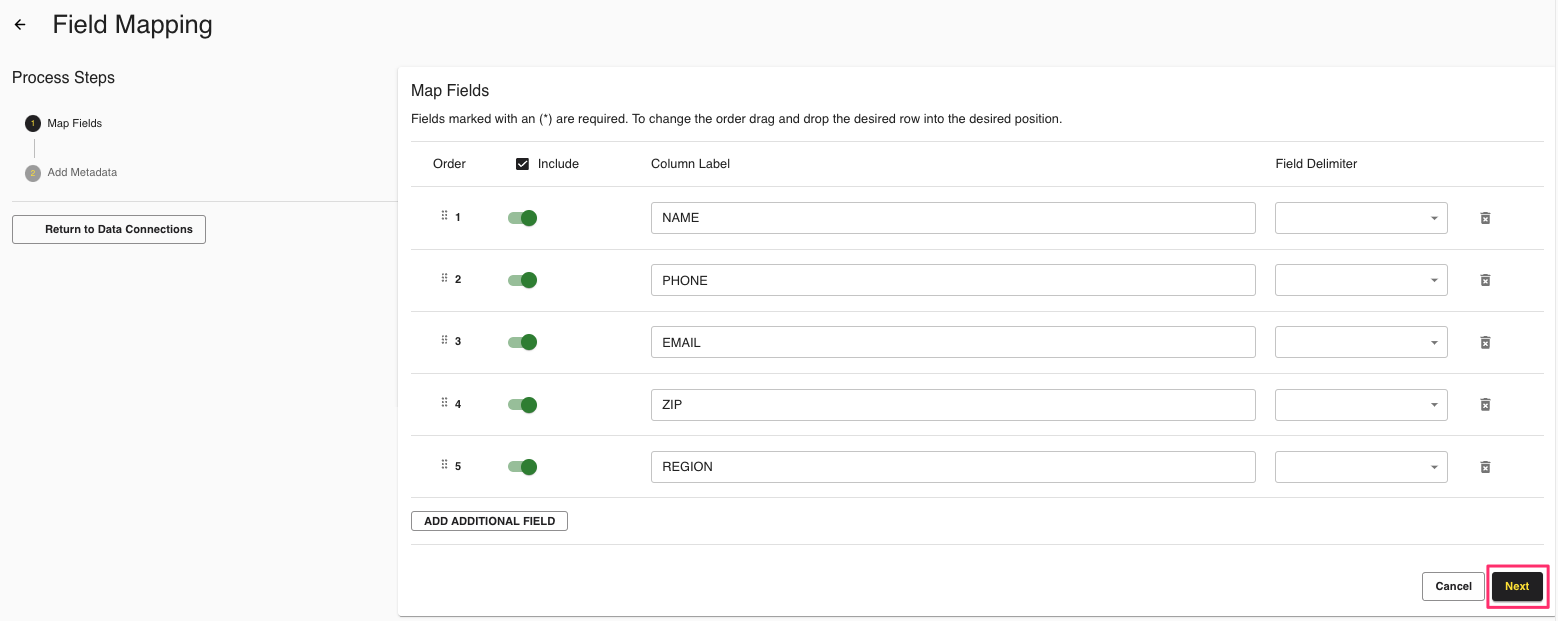

The Map Fields screen opens and the file column names auto-populate.

For any columns that you do not want to be queryable, slide the Include toggle to the left.

If needed, update any column labels.

Note

Ignore the field delimiter fields, because the delimiter was already defined when you created the data connection.

Click .

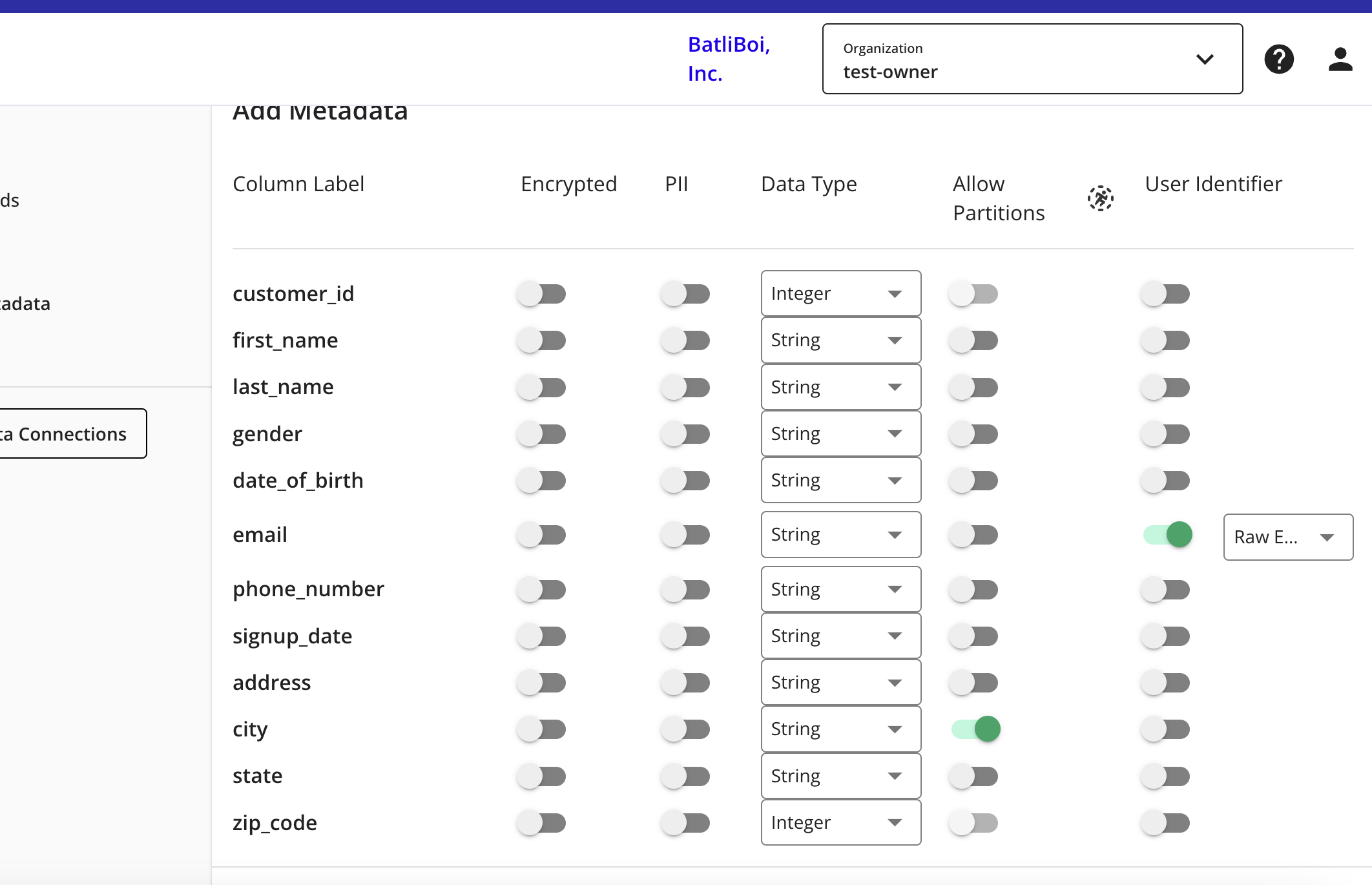

The Add Metadata screen opens.

For any column that contains PII data, slide the PII toggle to the right.

Select the data type for each column.

For any partition columns, slide the Allow Partitions toggle to the right.

If a column contains PII, slide the User Identifiers toggle to the right and then select the user identifier that defines the PII data.

Click .

Your data connection configuration is now complete and the status changes to "Completed".
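To decide which columns to mark with the Allow Partitions toggle, you can read the partition keys out of the Sample File Path. This is a minimal Python sketch, assuming Hive-style `key=value` folder segments between the Blob Path and the file name, as in the example blob_path/brand-id=1234/file.csv. The function name `partition_columns` is hypothetical.

```python
def partition_columns(sample_file_path, blob_path=""):
    """Return {partition column: sample value} from key=value folders in the path."""
    prefix = blob_path.rstrip("/") + "/" if blob_path else ""
    if not sample_file_path.startswith(prefix):
        raise ValueError("sample file path is not under the blob path")
    # Keep only the folder segments; the last segment is the file name.
    folders = sample_file_path[len(prefix):].split("/")[:-1]
    columns = {}
    for folder in folders:
        key, sep, value = folder.partition("=")
        if sep:  # only key=value folders are partitions
            columns[key] = value
    return columns
```

For example, `partition_columns("blob_path/brand-id=1234/file.csv", "blob_path")` returns `{"brand-id": "1234"}`.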