Privacy-Preserving Techniques and Clean Room Results

LiveRamp Clean Room implements security and privacy by design and leverages an array of techniques for privacy preservation, empowering clean room data owners and their partners to build a secure, privacy-compliant solution for data collaboration.

It is critical to protect consumer privacy and the rights of data owners at every stage of data collaboration. This topic addresses privacy-preserving techniques available for use in LiveRamp clean rooms. Results data consist of the approved, privacy-preserving output of collaboration that conforms to the mutual requirements of data owners.

LiveRamp Clean Room uses privacy-preserving techniques that are configurable depending on the type of analysis and sensitivity of the dataset. These approaches include k-minimization on output, k-minimization on input as a Dataset Analysis Rule, the application of random noise, and custom differential privacy settings (limited availability).

K-min Results Output Enforcement

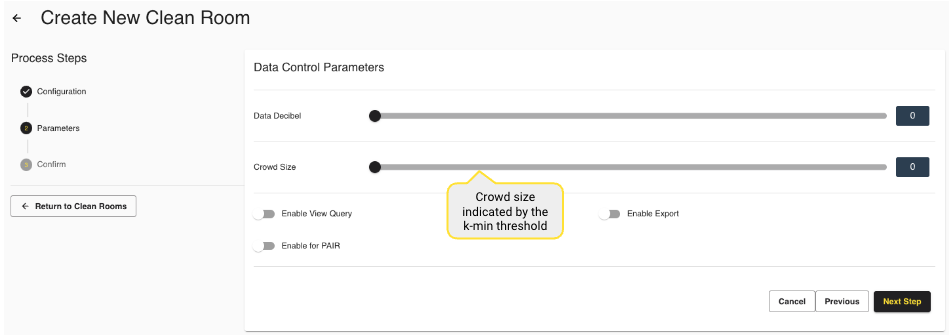

LiveRamp Clean Room can apply a customer-specified k-min threshold (referred to as a "Crowd Size" data control parameter) to every user metric that is reported in all queries executed within clean rooms. This threshold is set when creating the clean room.

For example, consider the following query. If the crowd size (COUNT) is set to 100, the last row in the result set is not displayed to the user because it does not meet the minimum threshold on output. The threshold is enforced only if the query author has selected the k-min checkbox for the measure. If you rely on the k-min threshold for output privacy protection, confirm that k-min enforcement is applied correctly before opting datasets in to a given question.

```sql
SELECT usm.audience_segment,
       COUNT(*) AS impressions
FROM adlog_impressions ali
JOIN user_segment_map usm
  ON ali.user_id = usm.user_id
GROUP BY 1
ORDER BY 2 DESC;
```

| AUDIENCE_SEGMENT | IMPRESSIONS |
|---|---|
| High Household Income | 184795 |
| Owns RV | 99264 |
| ... | ... |
| Owns Stocks & Bonds | 21166 |
| Casino Gambler | 20980 |
| Children in Household | 88 => NOT RETURNED/DISPLAYED |
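The output check can be pictured as a simple filter over the result rows. The following is a minimal sketch, not LiveRamp's implementation: the function name `apply_k_min` is hypothetical, and it assumes that a row whose metric exactly equals the crowd size is still returned.

```python
def apply_k_min(rows, metric, crowd_size):
    """Keep only rows whose metric meets the minimum crowd size.

    Assumption: a value equal to crowd_size passes the check.
    """
    return [row for row in rows if row[metric] >= crowd_size]


# Rows shown in the example result set (the elided rows are omitted here).
results = [
    {"audience_segment": "High Household Income", "impressions": 184795},
    {"audience_segment": "Owns RV", "impressions": 99264},
    {"audience_segment": "Owns Stocks & Bonds", "impressions": 21166},
    {"audience_segment": "Casino Gambler", "impressions": 20980},
    {"audience_segment": "Children in Household", "impressions": 88},
]

visible = apply_k_min(results, metric="impressions", crowd_size=100)
for row in visible:
    print(row["audience_segment"], row["impressions"])
```

With a crowd size of 100, the "Children in Household" row (88 impressions) is dropped and the other rows are returned unchanged.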

Aggregation Threshold Dataset Analysis Rule

Your organization may wish to enforce k-min on input rather than on output results to ensure a specified number of identifiers are included in every aggregation performed in the clean room. LiveRamp enables this enforcement via the Aggregation Threshold dataset analysis rule.

Dataset analysis rules can be set on a specific dataset once that dataset has been provisioned to a clean room. To learn more about dataset analysis rules, see "Set Dataset Analysis Rules".
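To illustrate how a threshold on input differs from a threshold on output, the sketch below counts distinct identifiers per group and only releases an aggregate when enough identifiers contributed to it. This is an assumption-laden illustration: the function `aggregate_with_threshold`, the field names, and the "greater than or equal" comparison are hypothetical and do not describe how the Aggregation Threshold rule is evaluated internally.

```python
from collections import defaultdict


def aggregate_with_threshold(records, group_key, id_key, min_identifiers):
    """Count rows per group, releasing only groups with enough distinct identifiers."""
    ids_by_group = defaultdict(set)
    rows_by_group = defaultdict(int)
    for record in records:
        ids_by_group[record[group_key]].add(record[id_key])
        rows_by_group[record[group_key]] += 1
    return {
        group: rows_by_group[group]
        for group, ids in ids_by_group.items()
        if len(ids) >= min_identifiers  # hypothetical comparison rule
    }


records = [
    {"segment": "A", "user_id": "u1"},
    {"segment": "A", "user_id": "u2"},
    {"segment": "A", "user_id": "u2"},
    {"segment": "A", "user_id": "u3"},
    {"segment": "B", "user_id": "u4"},
    {"segment": "B", "user_id": "u4"},
    {"segment": "B", "user_id": "u4"},
]

impressions = aggregate_with_threshold(
    records, group_key="segment", id_key="user_id", min_identifiers=3
)
print(impressions)
```

Segment B has three rows but only one identifier, so it is suppressed even though its row count alone would pass a threshold of 3 applied to the output metric.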

Noise Injection Using Laplacian Noise

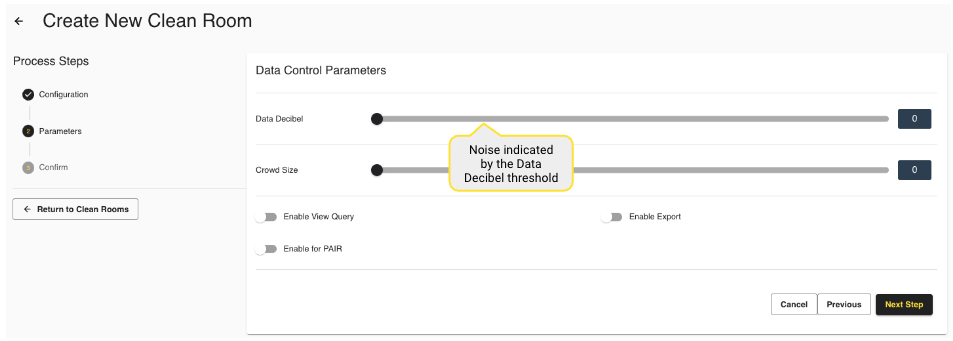

In addition to k-min enforcement on output, noise injection (referred to as a "Data Decibel" data control parameter) lets you apply a randomized metric data perturbation technique by adding Laplacian noise to alter the metrics shown to the user. This prevents leakage of sensitive information if a user tries to run repeated queries that would return similar results with a known difference that could be exploited. You can specify the amount of noise using the Data Decibel parameter when creating the clean room.

The noise injection is based on random noise drawn from a Laplace distribution and is applied on output if the Noise checkbox is selected by the query author. If relying on noise as a privacy-preservation mechanism, it is important to ensure the correct checkbox is selected on the appropriate measures before opting datasets into a question.

This methodology is implemented as a Python user-defined function (UDF) appended to clean room queries. Question templates allow clean room owners to specify which metrics should be treated with random noise.
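The UDF itself is not shown in this topic. As a rough sketch of the general technique, the following standard-library Python draws Laplace noise and adds it to a metric. The function names, the `scale` parameter, and the rounding step are assumptions made for illustration only.

```python
import random


def laplace_noise(scale, rng=random):
    """Draw one sample from a Laplace distribution centered at 0.

    The difference of two independent exponential samples with mean
    `scale` follows a Laplace(0, scale) distribution.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_metric(value, scale, rng=random):
    """Return the metric with Laplace noise added, rounded to an integer."""
    return round(value + laplace_noise(scale, rng))


rng = random.Random(7)  # seeded only so the sketch is repeatable
print(noisy_metric(14237, scale=10, rng=rng))
```

A larger `scale` spreads the noise more widely around the true value, which corresponds to stronger perturbation of the reported metric.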

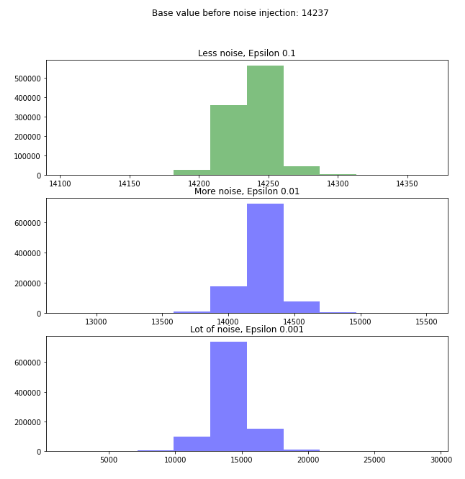

The noise value is adjusted based on where the Data Decibel slider is set. A higher data decibel yields a value closer to 0 (more noise) and a lower decibel yields a value closer to 1 (less noise).

The following example uses a base value of 14,237 before noise injection. The table summarizes how the noised values are distributed at three slider settings: Low (1), Medium (50), and High (99). Low and medium noise produce little variation, whereas high noise changes the values significantly.

| Metric | Low Noise | Medium Noise | High Noise |
|---|---|---|---|
| count | 1,000,000.00 | 1,000,000.00 | 1,000,000.00 |
| mean | 14,237.01 | 14,236.99 | 14,237.59 |
| std | 14.15 | 141.36 | 1,415.43 |
| min | 14,103.00 | 12,758.00 | 1,648.00 |
| 25% | 14,230.00 | 14,168.00 | 13,544.00 |
| 50% | 14,237.00 | 14,237.00 | 14,237.00 |
| 75% | 14,244.00 | 14,306.00 | 14,931.00 |
| max | 14,366.00 | 15,523.00 | 29,134.00 |
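The spread in the table above can be approximated with a short simulation. A Laplace distribution with scale b has a standard deviation of √2 · b, so the reported standard deviations are consistent with scales of roughly 10, 100, and 1,000. These scales are inferred from the table, not documented values, and the mapping from the Data Decibel slider to a scale is an assumption.

```python
import random
import statistics

BASE_VALUE = 14237
# Assumed scales, inferred from the table's standard deviations (std = sqrt(2) * scale).
ASSUMED_SCALES = {"low": 10, "medium": 100, "high": 1000}


def simulate(scale, runs, rng):
    """Return `runs` noisy copies of BASE_VALUE using Laplace(0, scale) noise."""
    return [
        BASE_VALUE + rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
        for _ in range(runs)
    ]


rng = random.Random(42)  # seeded only so the sketch is repeatable
summary = {}
for label, scale in ASSUMED_SCALES.items():
    samples = simulate(scale, runs=20_000, rng=rng)
    summary[label] = (statistics.fmean(samples), statistics.pstdev(samples))
    mean, std = summary[label]
    print(f"{label:>6}: mean={mean:,.2f} std={std:,.2f}")
```

In every case the mean stays close to the base value while the standard deviation grows with the scale, which matches the pattern in the table.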

Differential Privacy (Limited Availability)

While randomized noise injection is likely sufficient protection for most use cases, it sometimes muddies the utility of query results or is not considered sufficient for the protections an organization requires for its datasets. In these cases, you might wish to consider an advanced privacy-preserving technique: differential privacy.

Differential privacy applies noise to query results on output, calibrated so that the analysis reveals nothing about an individual subject that was not already known before the analysis took place. In other words, the results are altered just enough that the analyst cannot learn private information about a single individual that they would not otherwise have known. LiveRamp can enable various differential privacy settings for more advanced use cases and can work with your team to determine the best path for your organization.
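This topic does not describe which differential privacy settings are available. As general background only, the textbook Laplace mechanism calibrates noise with scale = sensitivity / epsilon, and under basic sequential composition the privacy loss of repeated releases adds up. The sketch below shows that arithmetic; the function names are hypothetical and nothing here describes LiveRamp's configuration.

```python
import math


def laplace_scale(sensitivity: float, epsilon: float) -> float:
    """Scale of the Laplace mechanism: sensitivity divided by epsilon."""
    if sensitivity <= 0 or epsilon <= 0:
        raise ValueError("sensitivity and epsilon must be positive")
    return sensitivity / epsilon


def total_epsilon(epsilons) -> float:
    """Basic sequential composition: privacy losses of successive releases add up."""
    return sum(epsilons)


# A counting query has sensitivity 1: one individual changes the count by at most 1.
for epsilon in (1.0, 0.1, 0.01):
    scale = laplace_scale(sensitivity=1, epsilon=epsilon)
    print(f"epsilon={epsilon}: scale={scale:.0f}, noise std={math.sqrt(2) * scale:.1f}")

print("three releases at epsilon=0.1:", round(total_epsilon([0.1, 0.1, 0.1]), 2))
```

A smaller epsilon means a larger noise scale and stronger protection, at the cost of less precise results.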

Considerations

When applying k-min enforcement and noise injection, consider the following:

- Balance privacy protection against query utility so that stricter privacy treatments do not compromise the usefulness of results.
- Rerunning a query for the same date range produces different results, so you must account for the expected volume of runs.
- You cannot configure noise injection for metrics that require a sensitivity value higher than 1.
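The reason run volume matters can be seen in a small simulation: when each run draws fresh, independent noise, averaging many runs of the same query cancels much of that noise. The sketch below assumes Laplace noise with a hypothetical scale of 100 and the base value from the earlier example. It illustrates the statistical effect only and is not a description of how LiveRamp handles repeated runs.

```python
import random
import statistics

TRUE_VALUE = 14237   # base value from the noise example
ASSUMED_SCALE = 100  # hypothetical Laplace scale


def noisy_run(rng):
    """One query run: the true value plus fresh Laplace noise."""
    noise = rng.expovariate(1 / ASSUMED_SCALE) - rng.expovariate(1 / ASSUMED_SCALE)
    return TRUE_VALUE + noise


def averaged_estimate(runs, rng):
    """Average the results of `runs` independent reruns of the same query."""
    return statistics.fmean([noisy_run(rng) for _ in range(runs)])


def estimate_spread(runs, trials, rng):
    """Standard deviation of the averaged estimate across repeated trials."""
    return statistics.pstdev([averaged_estimate(runs, rng) for _ in range(trials)])


rng = random.Random(2024)  # seeded only so the sketch is repeatable
for runs in (1, 10, 100):
    spread = estimate_spread(runs, trials=500, rng=rng)
    print(f"{runs:>3} runs averaged: spread of estimate = {spread:.1f}")
```

The spread of the averaged estimate shrinks roughly with the square root of the number of runs, so a high volume of reruns weakens the protection that a given noise level provides.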