Export Analytical Results to BigQuery

You can set up an export of analytics results to BigQuery for the following clean room types:

Snowflake

Hybrid

Confidential Computing

Walled Garden (ADH, AMC, and FAA)

Note

List question results cannot be exported to BigQuery.

Make sure that the bucket path you use for the export destination is distinct from any bucket paths you use for existing BigQuery data connections in LiveRamp Clean Room. For example, if you want to use the same bucket for both exports and data connections, use one folder in that bucket for exports and a separate folder for each data connection.

Partners invited to a clean room must have their export destination connections (grouped under "Destinations") approved by clean room owners. Contact your Customer Success representative to facilitate the approval.

The IAM role from LiveRamp Clean Room must have read, write, and delete access to the customer bucket or folder.

Configuring exports outside of a clean room (such as at the organization level) is still supported but will be deprecated. We recommend setting up clean room question exports within a clean room.
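The bucket-path rule in the note above amounts to a prefix check: an export path must not equal, contain, or sit inside any data connection path. The sketch below is a local sanity check only, assuming bucket paths are written as `gs://bucket/folder/` strings; the helper names and example paths are hypothetical and are not part of LiveRamp Clean Room.

```python
def _parts(path: str) -> tuple[str, ...]:
    # Drop the scheme (e.g. "gs://") if present and split the rest into
    # segments, ignoring empty segments caused by trailing slashes.
    _, sep, rest = path.partition("://")
    return tuple(p for p in (rest if sep else path).split("/") if p)


def overlaps(a: str, b: str) -> bool:
    """Return True if one path equals the other or is nested inside it."""
    pa, pb = _parts(a), _parts(b)
    shorter = min(len(pa), len(pb))
    return pa[:shorter] == pb[:shorter]


def conflicting_connections(export_path: str, connection_paths: list[str]) -> list[str]:
    """List the data connection paths that collide with the export path."""
    return [p for p in connection_paths if overlaps(export_path, p)]


if __name__ == "__main__":
    connections = ["gs://shared-bucket/connection-a/", "gs://shared-bucket/connection-b/"]
    print(conflicting_connections("gs://shared-bucket/exports/", connections))  # []
    print(conflicting_connections("gs://shared-bucket/", connections))  # both paths
```

Comparing whole path segments rather than raw string prefixes keeps `gs://bucket` from being treated as overlapping `gs://bucket2`.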

Overall Steps

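Perform the following overall steps to set up an export of analytics results to BigQuery:

Enable the clean room for exports.

Add the credentials.

Add an export destination connection.

Provision the destination connection to the clean room.

Create a new export.

For information on performing these steps, see the sections below.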

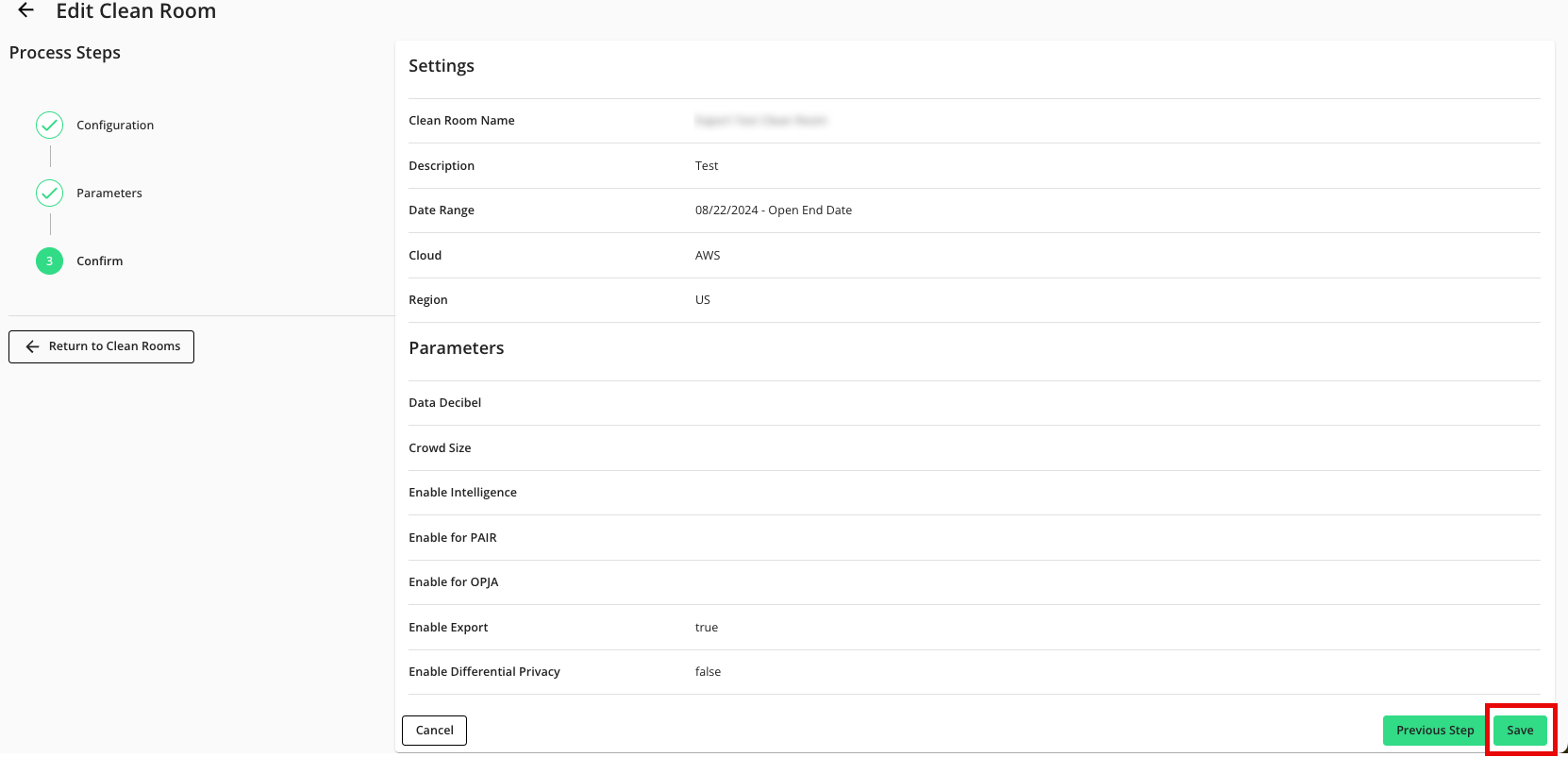

Enable the Clean Room for Exports

Before setting up an export, the clean room owner must enable exports for the selected source clean room (if not already done during clean room creation):

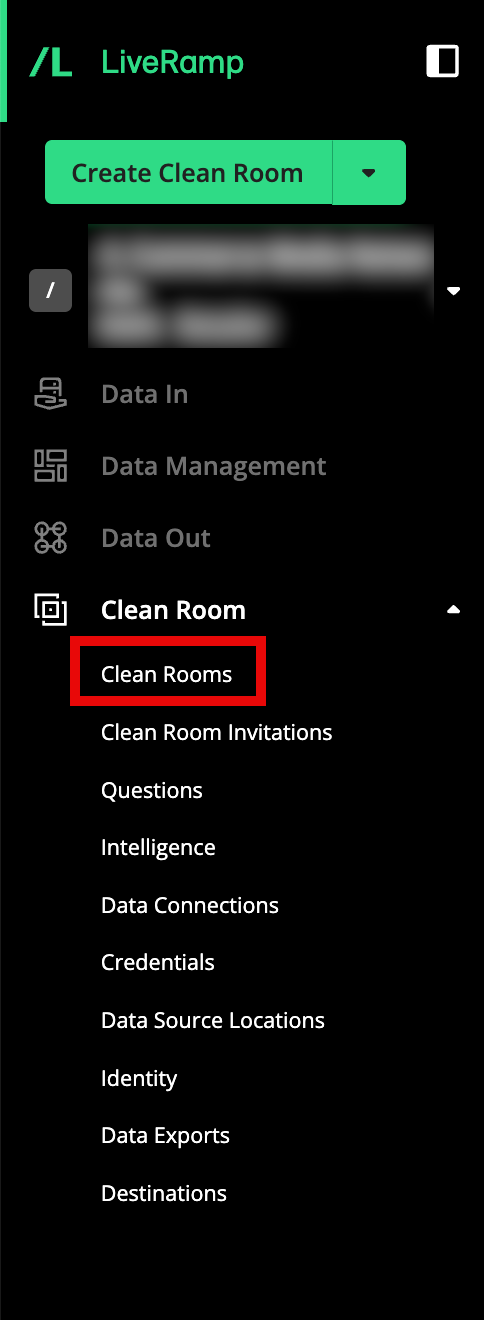

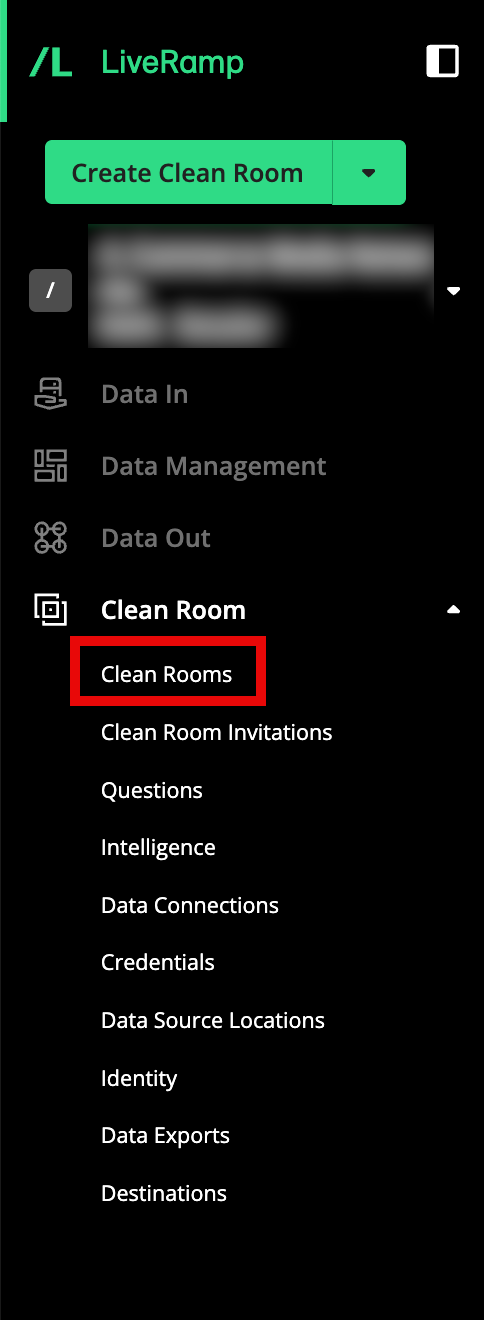

From the navigation menu, select Clean Room → Clean Rooms to open the Clean Rooms page.

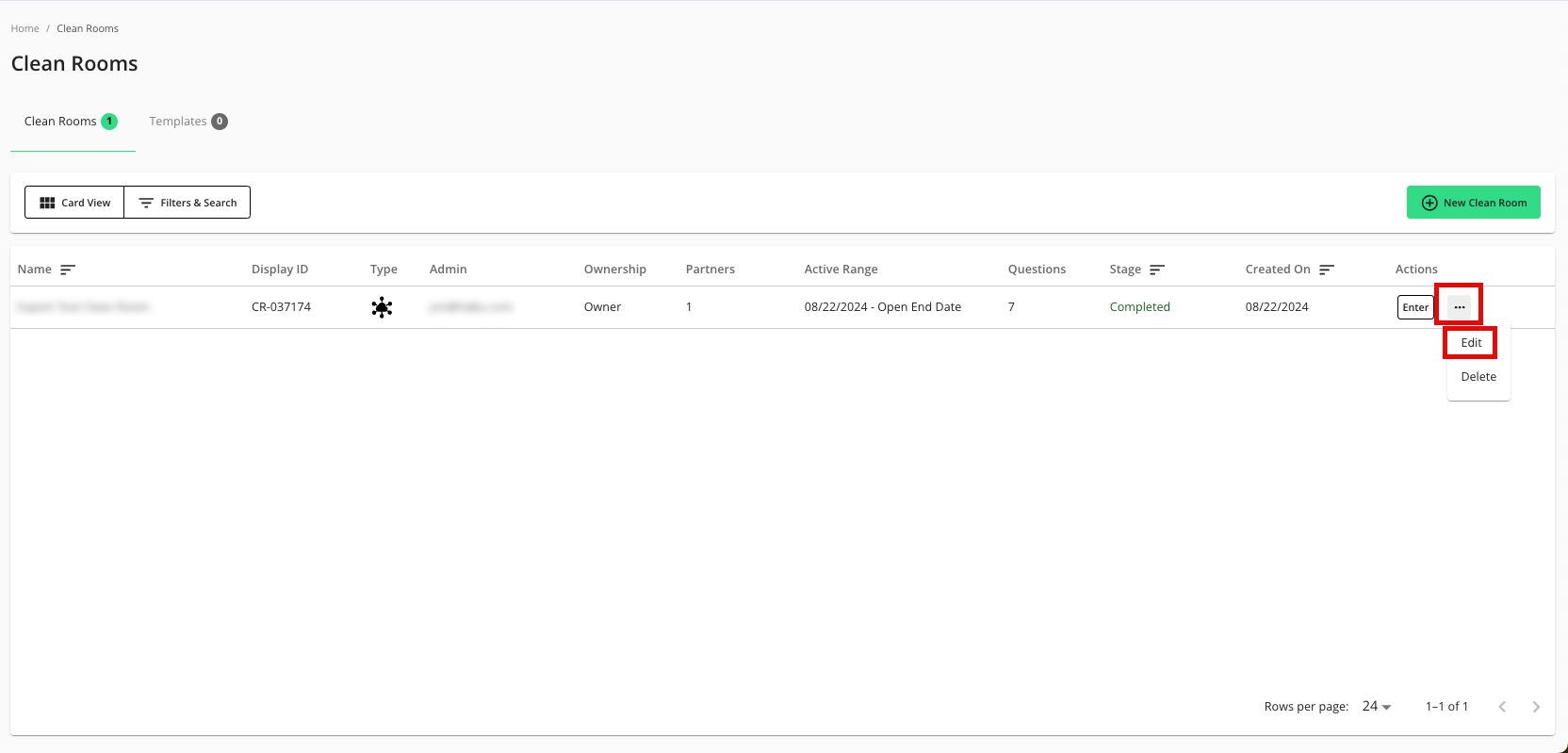

In the row for the clean room you would like to export from, click the More Options menu (the three dots), and then select Edit.

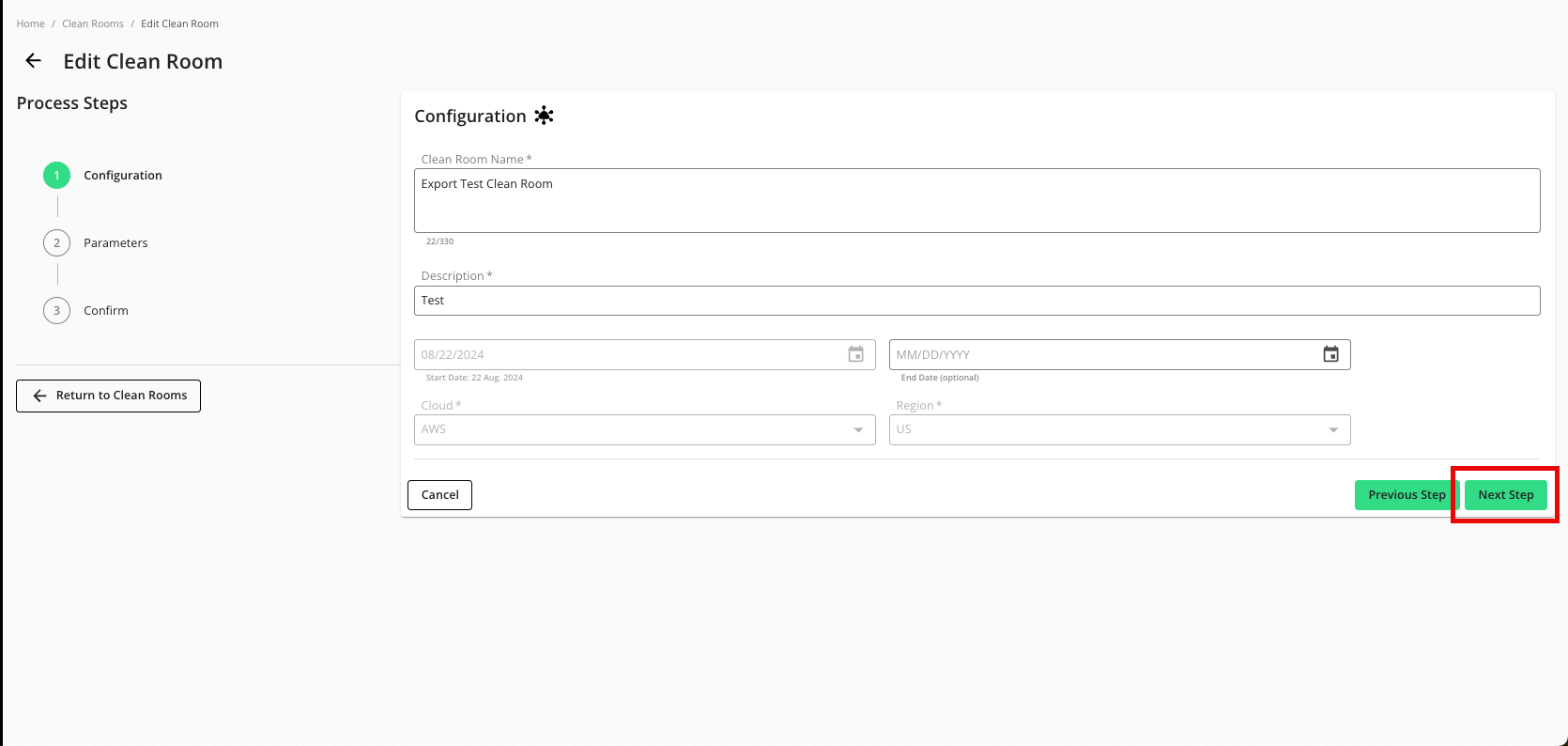

From the Configuration step, click .

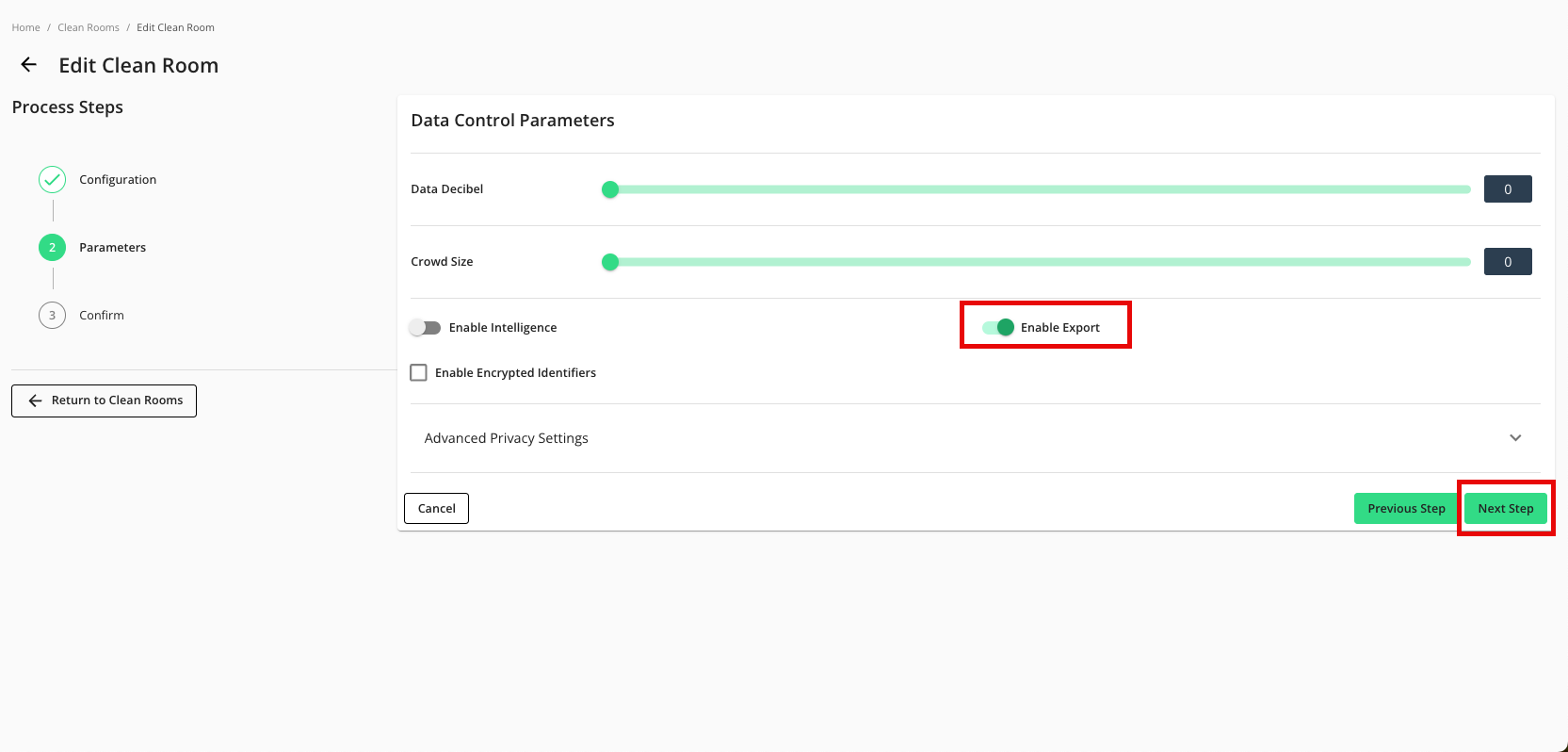

From the Parameters step, adjust any data control parameters as needed and then slide the Enable Export toggle to the right.

Click .

Verify that your data control parameters are correct and then click .

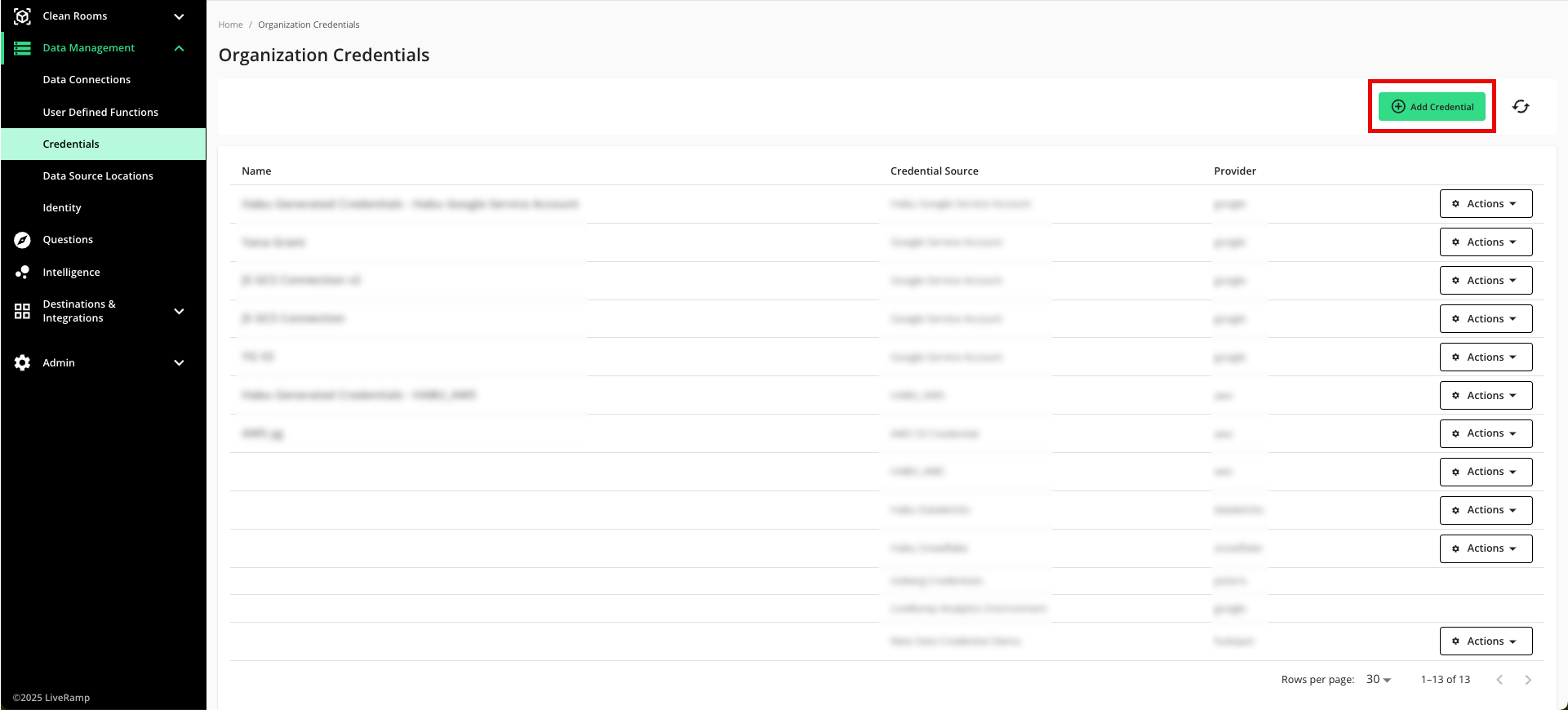

Add the Credentials

After enabling the clean room for exports, the clean room owner must first add either their own credentials or those of their partner:

From the navigation menu, select Clean Room → Credentials to open the Credentials page.

Click .

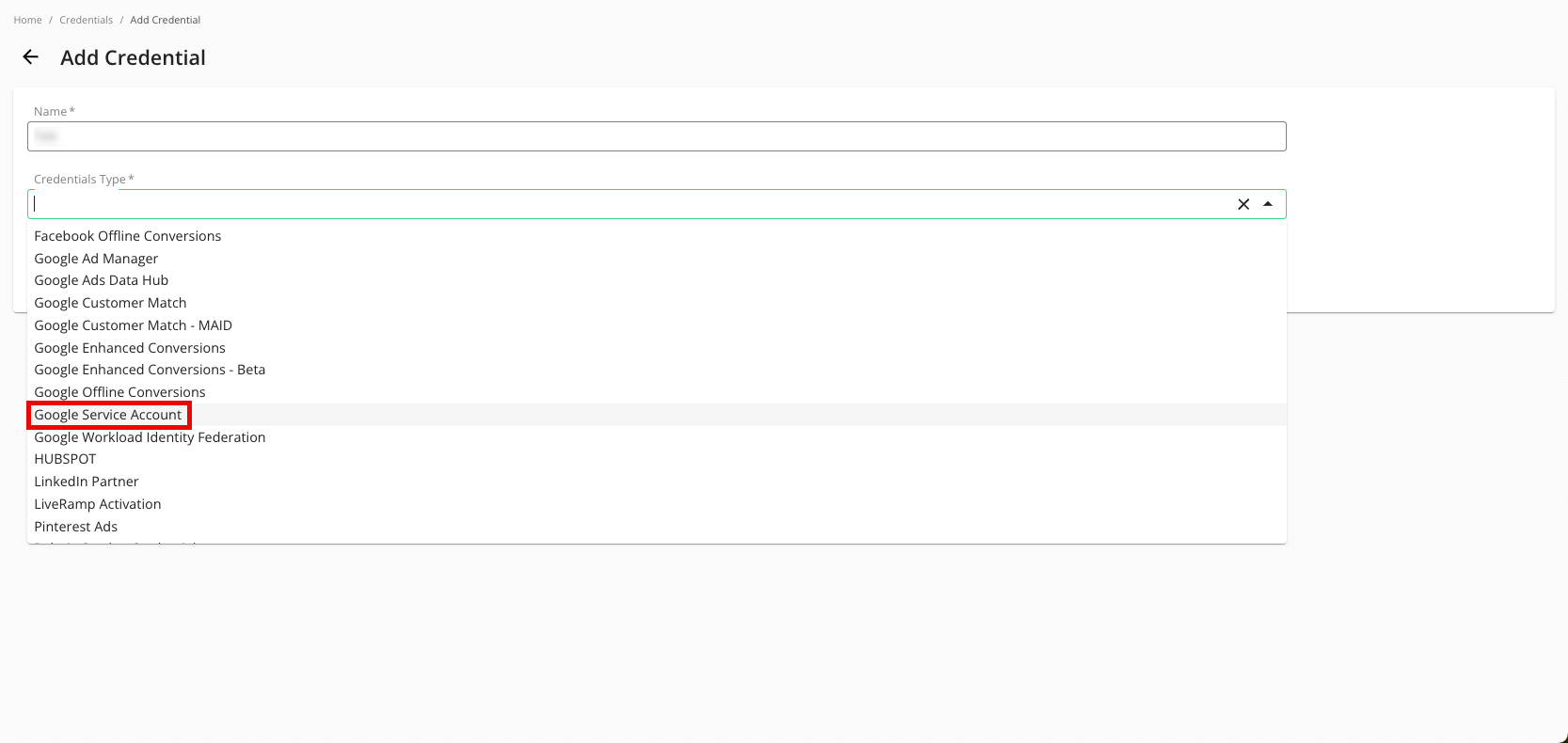

Enter a descriptive name for the credential.

For the Credentials Type, select "Google Service Account".

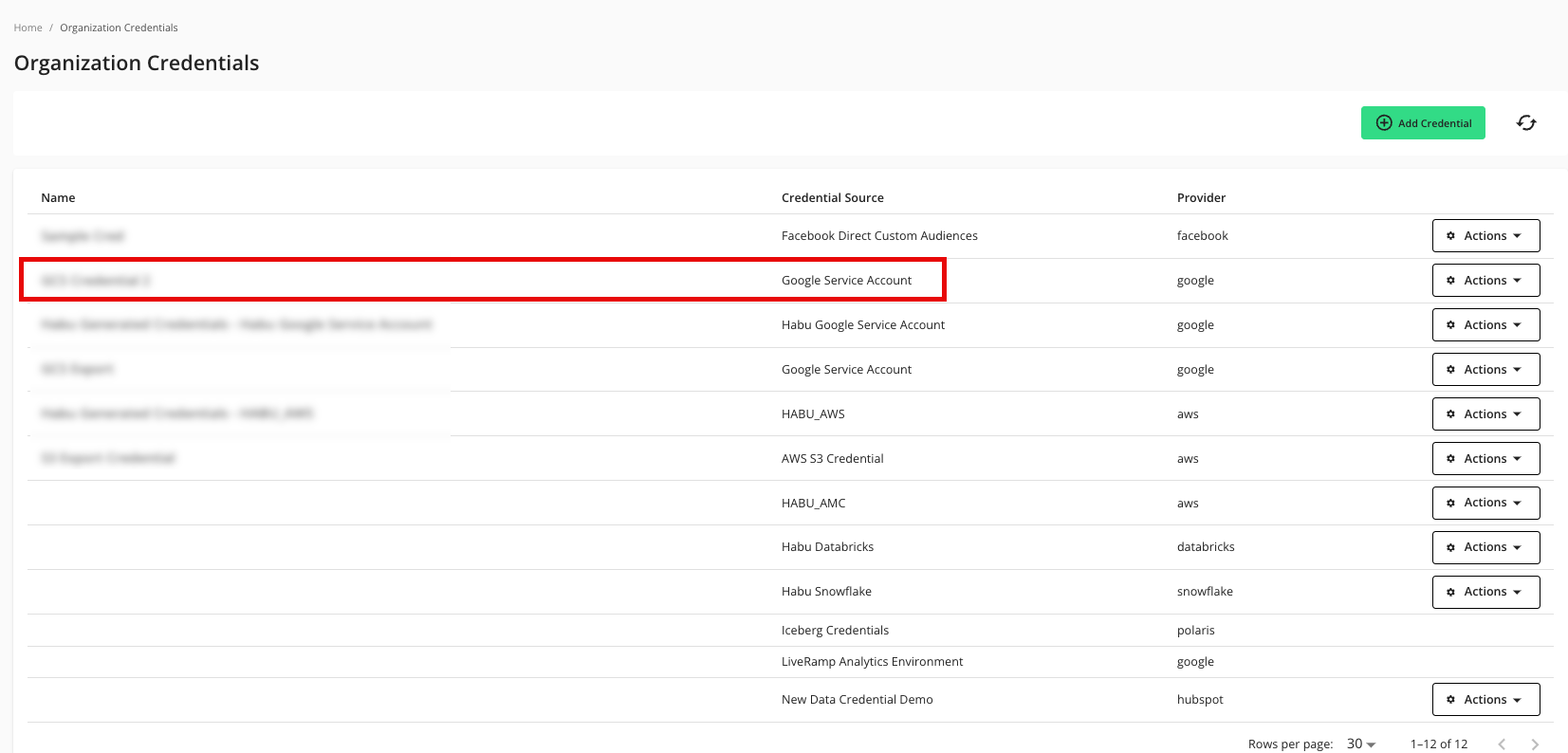

For the Project ID, enter your Google Cloud project ID.

Enter your credential JSON. The credential JSON is hidden by default; it is shown in the screenshot for demonstration purposes only.

Click .

Verify that your credentials have been added to LiveRamp Clean Room.
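Before pasting a key into the Credential JSON field, you may want to sanity-check it locally. The sketch below assumes the credential is a standard Google service account key file, which contains fields such as `type`, `project_id`, `client_email`, and `private_key`. The helper `check_service_account_key` is hypothetical: it checks only the structure of the JSON, not whether the key is active or has the access the export needs.

```python
import json

# Fields every Google service account key file is expected to contain.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_key(key_json: str) -> list[str]:
    """Return a list of structural problems in the key (empty if none found)."""
    try:
        key = json.loads(key_json)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["top-level value must be a JSON object"]

    problems = [f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)]
    # Service account keys declare their kind in the "type" field.
    if key.get("type") and key["type"] != "service_account":
        problems.append(f"unexpected type: {key['type']!r}")
    return problems


if __name__ == "__main__":
    sample = json.dumps({"type": "service_account", "project_id": "my-project"})
    print(check_service_account_key(sample))
    # ['missing field: private_key', 'missing field: client_email']
```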

Add an Export Destination Connection

To create an export destination connection:

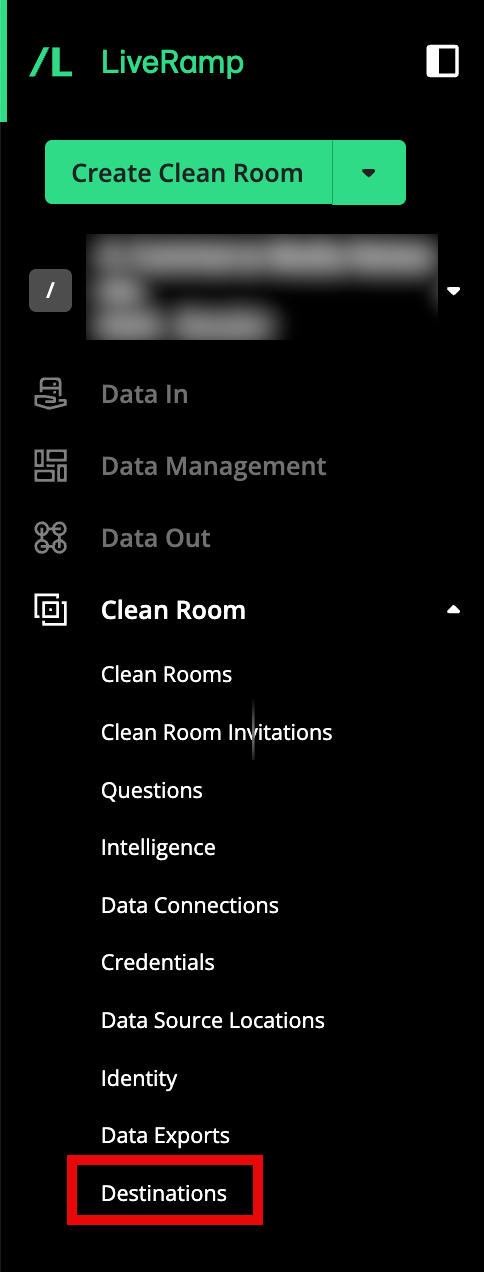

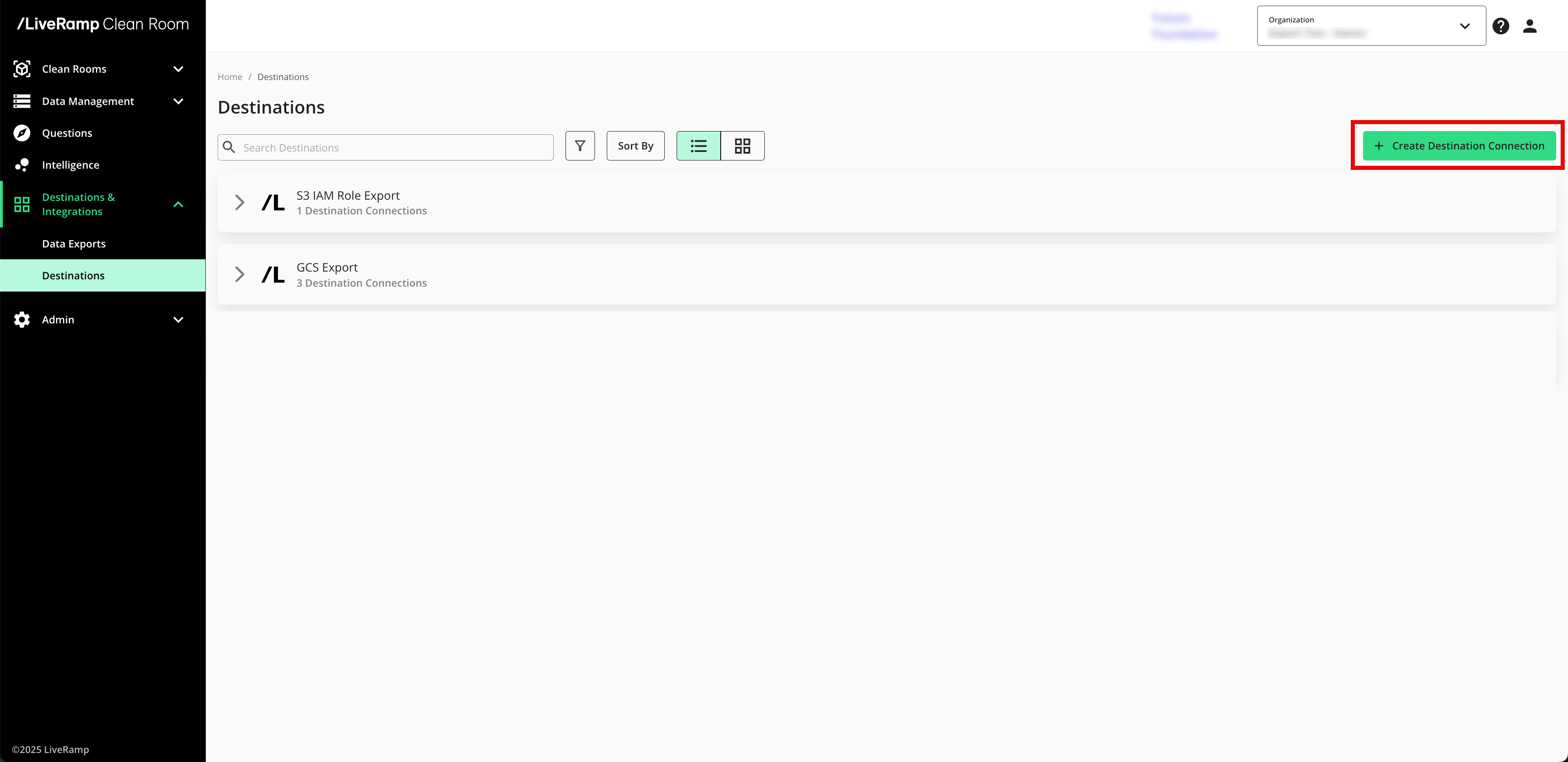

From the navigation menu, select Clean Room → Destinations to open the Destinations page.

Click .

Select BigQuery Export.

Enter a name and select the BigQuery Credential created in the "Add the Credentials" section above.

Click .

Confirm that the new destination connection has been added to your list of BigQuery export destination connections.

Note

The status of the destination connection will initially be "Configured", but you can continue to export data. Once the first successful export has been processed, the status changes to "Complete".

Provision a Destination Connection to the Clean Room

Once you've created a destination connection, you can provision that destination connection to the clean room you want to export results from:

Note

In most situations, only the clean room owner can provision export destinations using this method. To give clean room partners the ability to provision destinations that the owner has created, contact your LiveRamp representative.

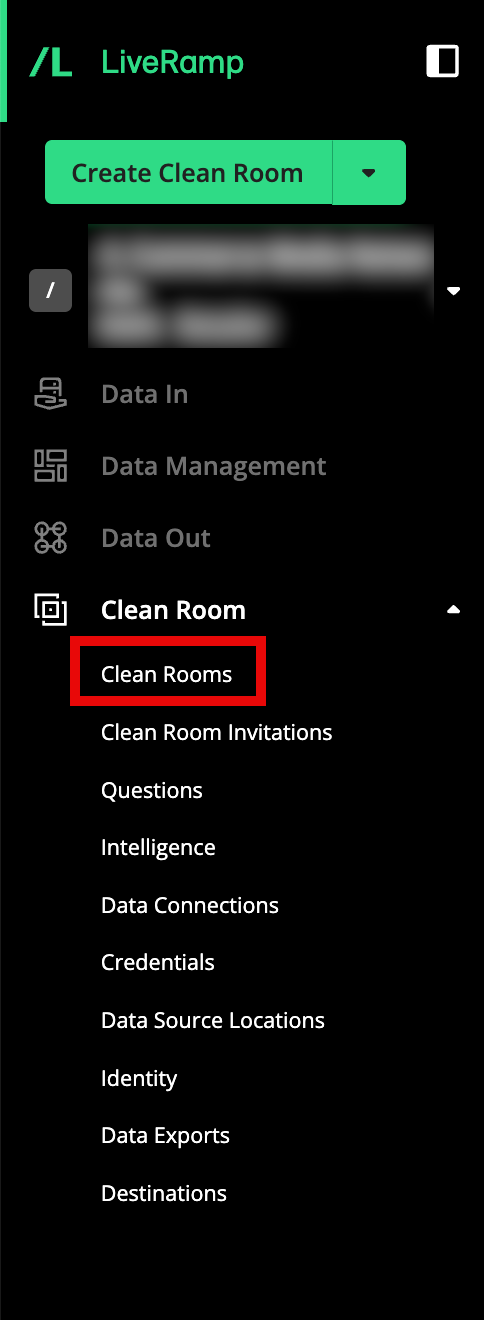

From the navigation menu, select Clean Room → Clean Rooms to open the Clean Rooms page.

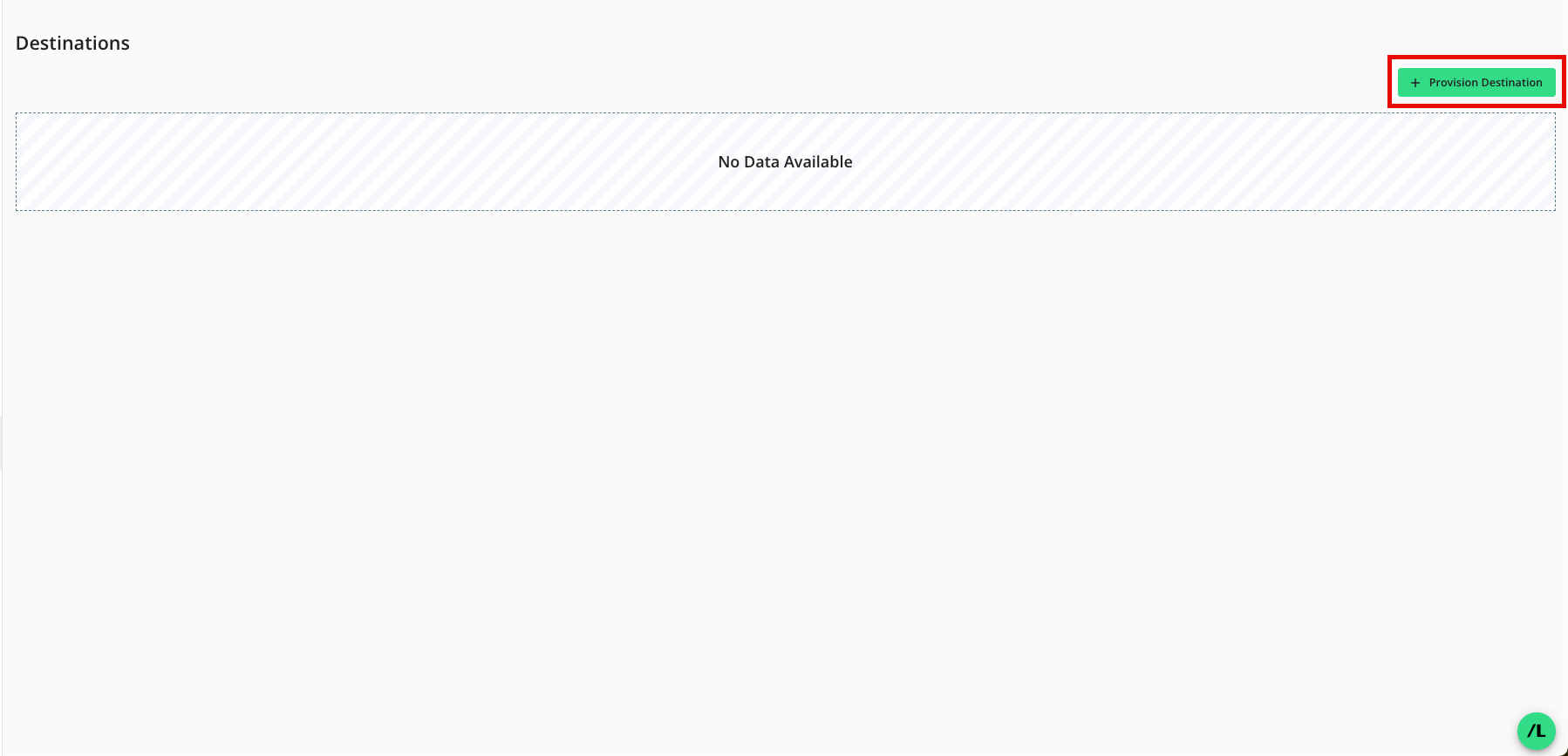

From the Clean Room navigation pane, select Destinations. The Destinations screen shows all destination connections provisioned to the clean room.

Click .

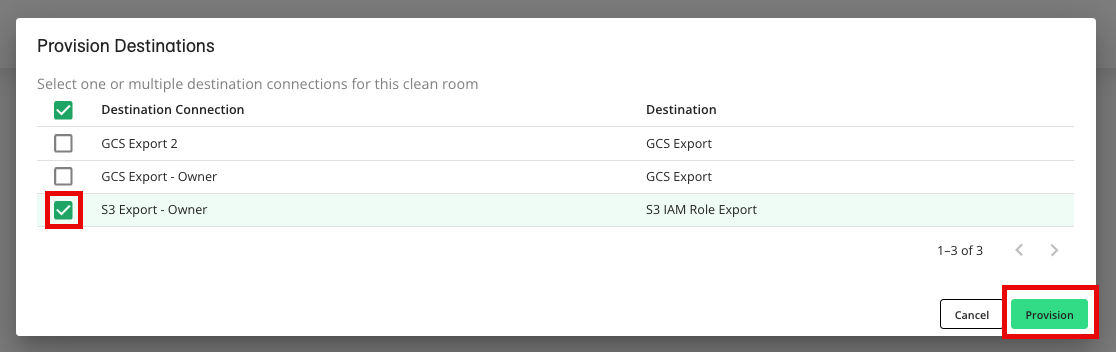

Check the check box for the desired destination connection and then click (AWS S3 example shown).

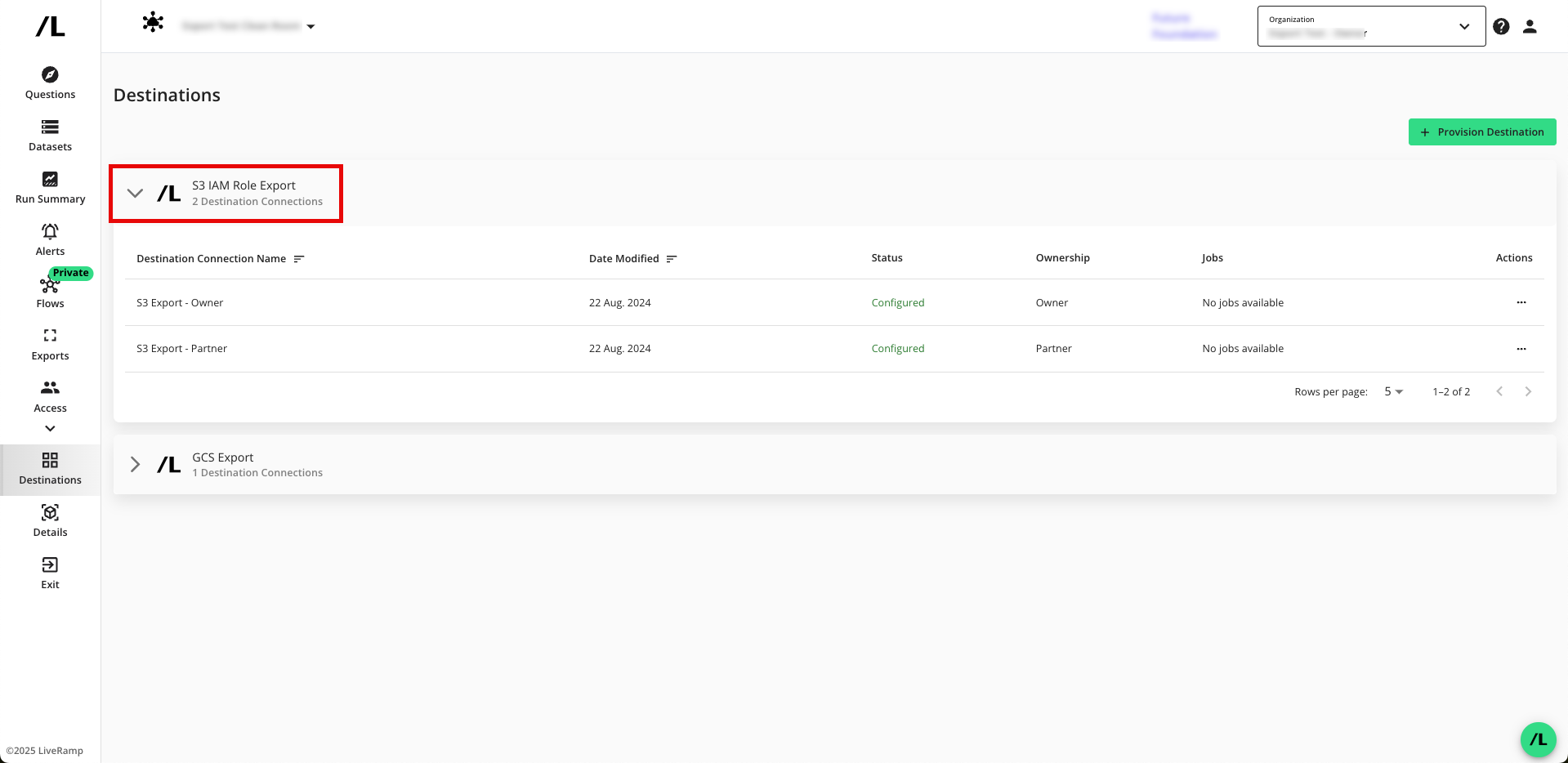

Verify that your destination connection has been added (S3 IAM example shown).

Note

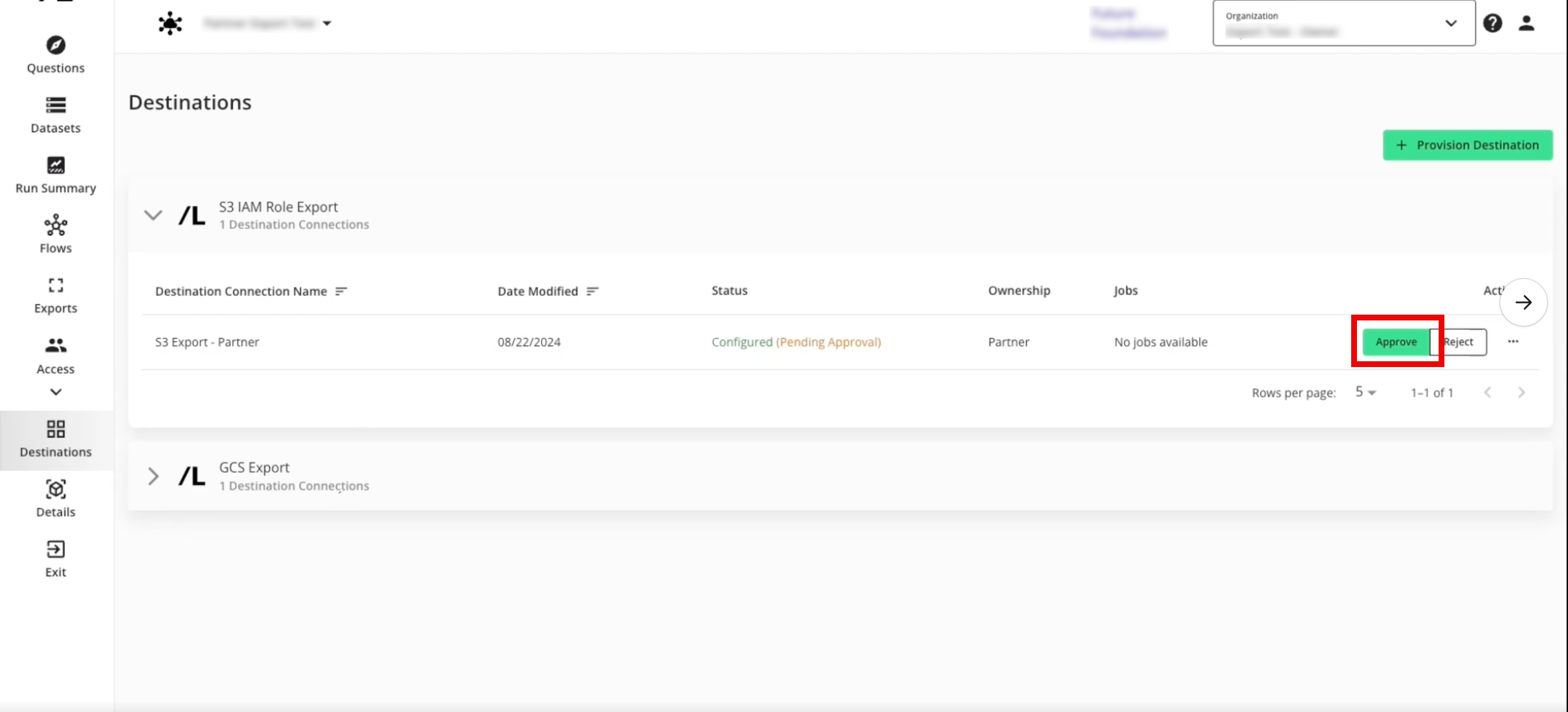

If you're a clean room partner who has been given the ability to request the provisioning of export destinations, the status will show as "Configured (Pending Approval)" until the clean room owner has approved the provisioning of the destination. You can still set up your exports, but the data will not be exported until the request has been approved.

Approve a Destination Provisioning Request

If you're a clean room owner, your clean room partners can provision export destinations in the clean room, but the status will show as "Configured (Pending Approval)" until you have approved the provisioning of the destination.

To approve the provisioning request:

From the navigation menu, select Clean Room → Clean Rooms to open the Clean Rooms page.

From the Clean Room navigation pane, select Destinations. The Destinations screen shows all destination connections provisioned to the clean room.

Click the caret next to the destination type to view the list of destination connections for that destination type.

For the destinations that show the status as "Configured (Pending Approval)", click .

The destination status changes to "Configured".
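The destination statuses described in this article can be summarized as a small state machine: a partner-provisioned destination stays "Configured (Pending Approval)" until the owner approves it, data is not exported while approval is pending, and a destination moves from "Configured" to "Complete" after its first successful export. The sketch below is an illustrative model of those rules only, not LiveRamp's implementation; the event names `approve` and `first_successful_export` are hypothetical.

```python
from enum import Enum


class DestinationStatus(Enum):
    PENDING_APPROVAL = "Configured (Pending Approval)"
    CONFIGURED = "Configured"
    COMPLETE = "Complete"


# Transitions described in this article (illustrative; event names are hypothetical).
TRANSITIONS = {
    DestinationStatus.PENDING_APPROVAL: {"approve": DestinationStatus.CONFIGURED},
    DestinationStatus.CONFIGURED: {"first_successful_export": DestinationStatus.COMPLETE},
    DestinationStatus.COMPLETE: {},
}


def can_export(status: DestinationStatus) -> bool:
    """Data is not exported while a provisioning request awaits approval."""
    return status is not DestinationStatus.PENDING_APPROVAL


def next_status(status: DestinationStatus, event: str) -> DestinationStatus:
    """Apply an event, raising ValueError if no such transition is described."""
    try:
        return TRANSITIONS[status][event]
    except KeyError:
        raise ValueError(f"no transition from {status.value!r} on {event!r}") from None


if __name__ == "__main__":
    status = next_status(DestinationStatus.PENDING_APPROVAL, "approve")
    print(status.value, can_export(status))  # Configured True
```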

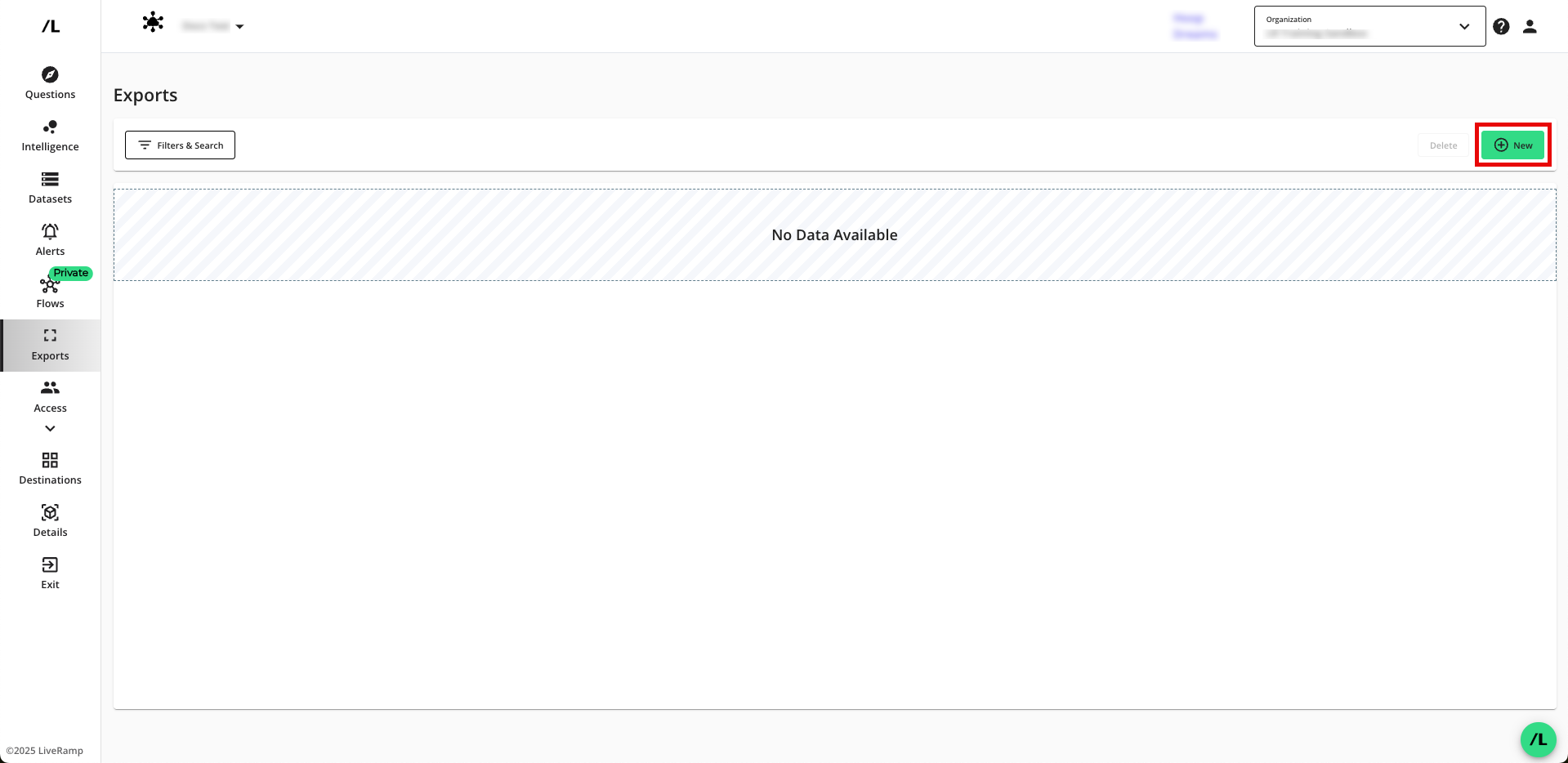

Create a New Export

After you've provisioned the destination connection to the clean room, create a new export:

From the navigation menu, select Clean Room → Clean Rooms to open the Clean Rooms page.

From the tile for the desired clean room, click .

From the Clean Room navigation pane, select Exports.

Click to open the wizard to create a new export.

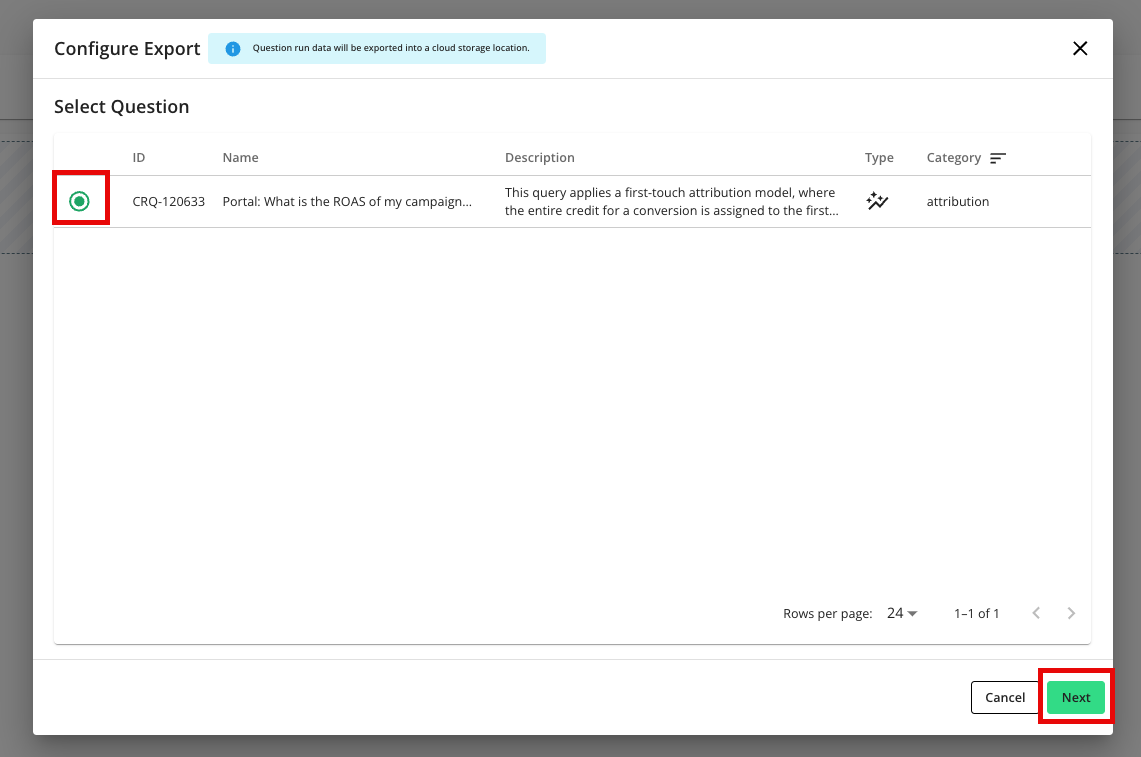

Select the question that you want to export outputs for and then click .

Select the specific BigQuery Export destination connection you want to send run outputs to.

Enter the dataset name and table name of the table that run results should be written to, and then click .

Verify that the export has been created. New exports appear on the Exports page; to view the details of an export, click its name.
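The dataset and table names you enter must be valid BigQuery names. The sketch below applies a deliberately conservative rule (1 to 1,024 ASCII letters, digits, or underscores), which matches BigQuery's dataset naming rules but is stricter than its table naming rules, which also accept characters such as hyphens and spaces. The function name is hypothetical; treat the BigQuery documentation as authoritative.

```python
import re

# Conservative pattern: valid for BigQuery dataset names and a strict subset
# of what BigQuery accepts for table names.
_SAFE_NAME = re.compile(r"[A-Za-z0-9_]{1,1024}")


def validate_export_target(dataset: str, table: str) -> list[str]:
    """Return problems with the dataset and table names (empty if both look safe)."""
    problems = []
    for label, name in (("dataset", dataset), ("table", table)):
        if not _SAFE_NAME.fullmatch(name):
            problems.append(
                f"{label} name {name!r} must be 1-1024 letters, digits, or underscores"
            )
    return problems


if __name__ == "__main__":
    print(validate_export_target("clean_room_exports", "question_results"))  # []
    print(validate_export_target("clean-room", "results"))  # one problem (dataset)
```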

Note

Exports can be paused, which will stop them from sending data upon the completion of each run.

Exports cannot be edited or deleted. To make changes, pause the existing export and create a new one.
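Because exports cannot be edited, a change amounts to pausing the old export and creating a replacement. The sketch below models that rule with an immutable record; the `Export` type, its fields, and `replace_export` are hypothetical illustrations, not LiveRamp Clean Room objects.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Export:
    # frozen=True: fields cannot be reassigned, mirroring "exports cannot be edited".
    question: str
    destination: str
    dataset: str
    table: str
    paused: bool = False


def replace_export(old: Export, **changes: str) -> tuple[Export, Export]:
    """Return (paused copy of old, new active export with the requested changes)."""
    paused_old = replace(old, paused=True)
    new_export = replace(old, paused=False, **changes)
    return paused_old, new_export


if __name__ == "__main__":
    old = Export("Audience overlap", "bq-export", "exports", "overlap_v1")
    old, new = replace_export(old, table="overlap_v2")
    print(old.paused, new.table)  # True overlap_v2
```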

Export Details

When a question runs, the results are written to the specified table. Each row includes a "Run ID" column that identifies the run that produced it. A second table containing metadata is also created.
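Because every row carries its run's ID, you can isolate the output of a single run by filtering on that column. The sketch below only builds the SQL text. It assumes the column is named `run_id` (the name in your table may differ from the "Run ID" label) and passes the value as a named query parameter `@run_id`; the project, dataset, and table names are placeholders.

```python
import re

# Loose guard against characters that could break out of the backtick-quoted reference.
_IDENT = re.compile(r"[A-Za-z0-9_-]+")
_COLUMN = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def run_results_query(project: str, dataset: str, table: str, run_id_column: str = "run_id") -> str:
    """Build a BigQuery SQL query selecting the rows written by one run.

    The run ID is supplied separately as the named parameter @run_id
    instead of being interpolated into the SQL text.
    """
    for part in (project, dataset, table):
        if not _IDENT.fullmatch(part):
            raise ValueError(f"unexpected characters in {part!r}")
    if not _COLUMN.fullmatch(run_id_column):
        raise ValueError(f"unexpected column name {run_id_column!r}")
    return (
        "SELECT *\n"
        f"FROM `{project}.{dataset}.{table}`\n"
        f"WHERE {run_id_column} = @run_id"
    )


if __name__ == "__main__":
    print(run_results_query("my-project", "clean_room_exports", "question_results"))
```

With the google-cloud-bigquery client, the value would be supplied through `QueryJobConfig(query_parameters=[ScalarQueryParameter("run_id", "STRING", ...)])`, assuming the column holds strings.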