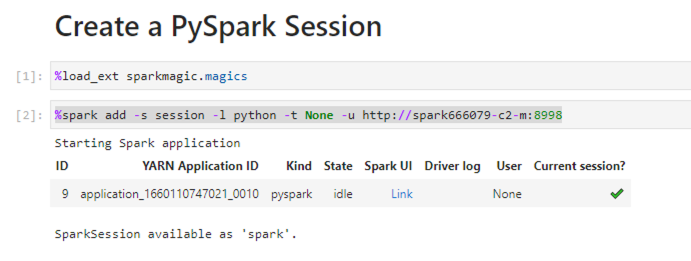

Create a PySpark Session

When you're working in Jupyter, a PySpark session connects your Jupyter Notebook to the Dataproc cluster so that you can access your Analytics Environment data.

Dataproc manages multiple PySpark sessions through Sparkmagic and Apache Livy. Dataproc has access to tenant data with the same permissions as the Data Scientist persona.

Jupyter creates a remote PySpark session on your account's Dataproc cluster. However, Jupyter does not have direct access to your Analytics Environment data; only Dataproc does. Sparkmagic and Apache Livy provide the connection from JupyterHub to your Dataproc cluster.
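To make the role of Livy more concrete, the sketch below parses the kind of JSON body that the Apache Livy REST API returns when listing sessions (`GET /sessions`). It is only an illustration: the sample payload is made up, the helper name `summarize_sessions` is hypothetical, and whether you can query the Livy endpoint directly in your environment is an assumption.

```python
import json
from typing import List, Tuple

# Illustrative payload only. The shape follows the Livy REST API's
# "GET /sessions" response; the values are invented for this sketch.
SAMPLE_RESPONSE = """
{
  "from": 0,
  "total": 2,
  "sessions": [
    {"id": 0, "kind": "pyspark", "state": "idle"},
    {"id": 1, "kind": "pyspark", "state": "busy"}
  ]
}
"""


def summarize_sessions(body: str) -> List[Tuple[int, str, str]]:
    """Return (id, kind, state) for each session in a Livy sessions response."""
    payload = json.loads(body)
    return [
        (s["id"], s.get("kind", ""), s["state"])
        for s in payload.get("sessions", [])
    ]


for session_id, kind, state in summarize_sessions(SAMPLE_RESPONSE):
    print(f"session {session_id}: {kind} ({state})")
```

In normal use Sparkmagic makes these calls for you, so a helper like this is only useful for understanding what happens behind the notebook magics.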

Click an existing PySpark notebook to open it and create a remote PySpark session.

If you don't have an existing PySpark notebook, see "Create a PySpark Notebook."

Enter %load_ext sparkmagic.magics in the cell and run it to load the Sparkmagic library.

In the "magic" cell, enter the name of the session and the URL of the Spark server using the following format, and then run it:

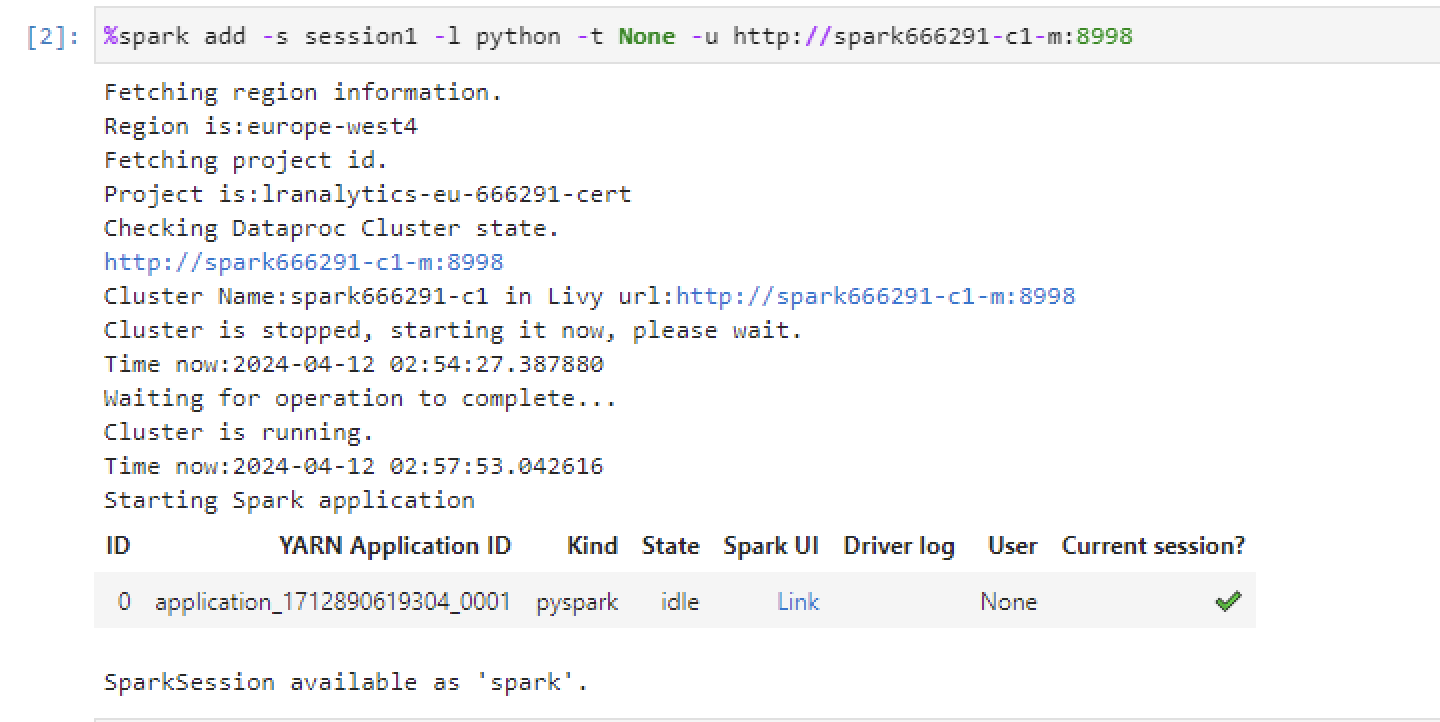

%spark add -s {session_name} -l python -t None -u http://spark{tenant_ID}-c{num_server}-m:8998

Where {tenant_ID} is your tenant ID (such as 8919) and {num_server} is the number of the server. For example:
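As a minimal sketch of how the placeholders are filled in, the following Python snippet assembles the command string from its parts. The helper names `livy_url` and `spark_add_command`, the session name `my_session`, and the server number `1` are hypothetical values chosen for illustration; only the tenant ID 8919 and the command format come from the text above.

```python
def livy_url(tenant_id: str, num_server: int, port: int = 8998) -> str:
    """Build the Spark server URL in the format described above."""
    return f"http://spark{tenant_id}-c{num_server}-m:{port}"


def spark_add_command(session_name: str, tenant_id: str, num_server: int) -> str:
    """Build the %spark add line to paste into the "magic" cell."""
    url = livy_url(tenant_id, num_server)
    return f"%spark add -s {session_name} -l python -t None -u {url}"


# Hypothetical session name and server number, for illustration only.
print(spark_add_command("my_session", "8919", 1))
# prints: %spark add -s my_session -l python -t None -u http://spark8919-c1-m:8998
```

The printed line is what you would paste into the "magic" cell. Replace the session name, tenant ID, and server number with the values for your own environment.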

Note

If your cluster stops due to inactivity, it will restart automatically once you initiate a session. You can view its status in the Jupyter output box.

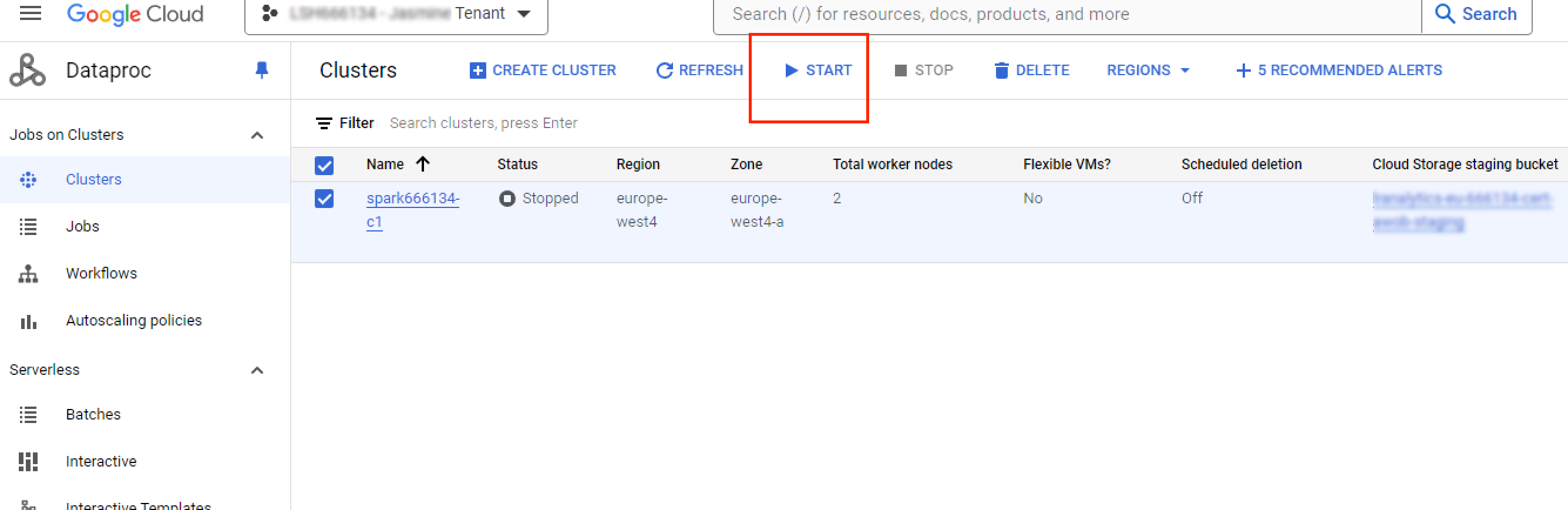

Start a Dataproc Cluster

If your Dataproc cluster has stopped, you can start it by clicking in the Dataproc Console.

Configure Email Alerts

When you run a PySpark session from Jupyter Notebook or JupyterLab, you can request an email alert if you expect the job to run for a long time and do not want to wait for it to finish. How long a job runs depends on factors such as its complexity and the data volume.

To request email alerts for your PySpark session when you run it via Jupyter Notebook or JupyterLab, set the following environment variables to True before your PySpark code:

%env ENABLE_FAILED_NOTIFICATION=True
%env ENABLE_COMPLETED_NOTIFICATION=True

By default, these variables are set to False.
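The `%env` magic sets an environment variable in the notebook's kernel process, which is the same thing that assigning to `os.environ` does in plain Python. The sketch below shows that equivalent form. The helper name `set_notifications` is hypothetical, and it is an assumption that the platform treats variables set this way the same as variables set with `%env`, so the `%env` form remains the documented one.

```python
import os

# Variable names taken from the %env lines in this section.
NOTIFICATION_VARS = (
    "ENABLE_FAILED_NOTIFICATION",
    "ENABLE_COMPLETED_NOTIFICATION",
)


def set_notifications(enabled: bool) -> None:
    """Set both notification variables to "True" or "False" as strings."""
    value = "True" if enabled else "False"
    for name in NOTIFICATION_VARS:
        os.environ[name] = value


# Turn on both alerts before running the PySpark code.
set_notifications(True)
```

Environment variables are always strings, which is why the helper writes the text "True" rather than a Python boolean.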

Note

You do not need to set these variables if you submit jobs to Dataproc clusters.

For most jobs, you can use the Jupyter Notebook or JupyterLab UI and view notifications directly in the UI.