Extracting Data from BigQuery to GCS

Users with the Data Scientist persona can extract data from their _pub dataset in BigQuery and export it to a Google Cloud Storage (GCS) bucket. They receive email notifications when an extraction succeeds or fails.

To enable this feature for your organization, create a case in the LiveRamp Community portal or contact your LiveRamp representative. LiveRamp will implement data extraction rules for your organization. Specific users will be granted permission to extract data, and no other users will have access to it.

Tip

Alternatively, users with the Data Scientist persona can publish a report to the _rp dataset. For more information, see "Build a Report in Tableau Server".

Extract Data from Your _pub Dataset to a GCS Bucket

Guidelines:

You can extract a minimum of 100 records and a maximum of 1000.

Your organization can perform a limited number of extractions. If that limit is exceeded, the notification message will indicate the maximum number.

The number of unique values across columns must be greater than 25.

Some organizations can only extract tables, not views. If you also need to extract views, contact your LiveRamp representative.

Data cannot be extracted from tables and views if they contain certain keywords or patterns, such as IDL, IdentityLink, Device_ID, and so on. If restricted keywords or patterns are included, the notification message will indicate what is not allowed.

Tables and views must not contain record or struct fields.
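Some of the guidelines above can be checked locally before labeling a table. The following is a minimal sketch, not LiveRamp's actual validation logic. It assumes that the restricted keywords are matched against column names (the guidelines do not say where they are matched), it uses only the three example keywords named above, and the `Column` type and `check_guidelines` function are hypothetical names. The unique-value rule and the organization-level extraction limit are not covered.

```python
from dataclasses import dataclass

# Assumption: only the example keywords from the guidelines are listed here;
# the complete set of restricted keywords and patterns is not documented.
RESTRICTED_KEYWORDS = ("idl", "identitylink", "device_id")

MIN_RECORDS = 100
MAX_RECORDS = 1000


@dataclass
class Column:
    name: str
    field_type: str  # BigQuery type name, e.g. "STRING", "INT64", "RECORD"


def check_guidelines(row_count, columns):
    """Return a list of problems found; an empty list means none were found."""
    problems = []

    # Record-count guideline: between 100 and 1000 records.
    if not MIN_RECORDS <= row_count <= MAX_RECORDS:
        problems.append(
            f"row count {row_count} is outside {MIN_RECORDS}-{MAX_RECORDS}"
        )

    for col in columns:
        # Record and struct fields are not allowed.
        if col.field_type.upper() in ("RECORD", "STRUCT"):
            problems.append(f"column '{col.name}' is a record/struct field")

        # Simple case-insensitive substring match (an assumption; this can
        # over-match, for example "idl" inside an unrelated word).
        lowered = col.name.lower()
        for keyword in RESTRICTED_KEYWORDS:
            if keyword in lowered:
                problems.append(
                    f"column '{col.name}' contains restricted keyword '{keyword}'"
                )

    return problems
```

For example, `check_guidelines(500, [Column("age_band", "STRING")])` returns an empty list, while a table with 50 rows and a `RECORD` column would produce two entries. A clean result here does not guarantee that the extraction will be accepted; the notification message remains the authoritative source.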

From BigQuery, create a table in the "_pub" (Publication) dataset.

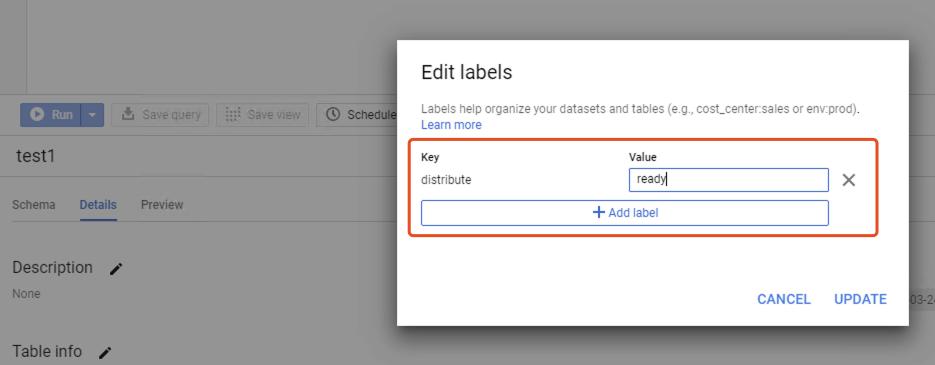

From the "_pub" dataset, select the desired table and add a label with the name "distribute" and a value of "ready".

To specify how often a table should be extracted from the _pub dataset to your GCS bucket, add another label with the name "frequency" and a value of "daily", "weekly", "monthly", or "quarterly". If a frequency is set, another label showing the next refresh date is added after the most recent extraction is performed. For example:

nextrefresh:.datetime
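The labeling steps above can also be scripted instead of done in the BigQuery console. The sketch below assumes the `google-cloud-bigquery` Python client is installed and that `client` is a `google.cloud.bigquery.Client`; the function names and the table ID in the usage note are hypothetical. Existing labels on the table are preserved.

```python
ALLOWED_FREQUENCIES = ("daily", "weekly", "monthly", "quarterly")


def build_extraction_labels(existing_labels, frequency=None):
    """Merge the extraction labels into a copy of the table's current labels."""
    labels = dict(existing_labels or {})
    labels["distribute"] = "ready"
    if frequency is not None:
        if frequency not in ALLOWED_FREQUENCIES:
            raise ValueError(
                f"frequency must be one of {ALLOWED_FREQUENCIES}, got {frequency!r}"
            )
        labels["frequency"] = frequency
    return labels


def label_table_for_extraction(client, table_id, frequency=None):
    """Add the 'distribute' (and optional 'frequency') labels to a table.

    `client` is expected to be a google.cloud.bigquery.Client.
    `table_id` is a fully qualified ID such as "project.dataset.table".
    """
    table = client.get_table(table_id)
    table.labels = build_extraction_labels(table.labels, frequency)
    # Only the labels field is sent in the update request.
    return client.update_table(table, ["labels"])
```

A call might look like `label_table_for_extraction(bigquery.Client(), "my-project.my_org_pub.my_table", "weekly")`, where the project, dataset, and table names are placeholders for your own.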

Once the extraction is complete, the extracted data will be available in the extraction folder of the GCS bucket that was configured for your organization's data extraction, such as gs://bucket_name/extraction/
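To confirm that extracted files have arrived, the extraction folder can be listed programmatically. This is a rough sketch that assumes the `google-cloud-storage` Python client is installed, that `client` is a `google.cloud.storage.Client` with read access to the bucket, and that the folder follows the `gs://bucket_name/extraction/` form shown above. The helper names are hypothetical, and the names and format of the extracted files are not specified here.

```python
from urllib.parse import urlparse


def split_gcs_uri(uri):
    """Split 'gs://bucket/path/' into (bucket_name, object_prefix)."""
    parsed = urlparse(uri)
    if parsed.scheme != "gs" or not parsed.netloc:
        raise ValueError(f"not a gs:// URI: {uri!r}")
    return parsed.netloc, parsed.path.lstrip("/")


def list_extracted_files(client, extraction_uri):
    """Return the names of all objects under the extraction folder.

    `client` is expected to be a google.cloud.storage.Client.
    """
    bucket_name, prefix = split_gcs_uri(extraction_uri)
    return [blob.name for blob in client.list_blobs(bucket_name, prefix=prefix)]
```

For example, `list_extracted_files(storage.Client(), "gs://bucket_name/extraction/")` returns the object names under that prefix, with `bucket_name` replaced by the bucket configured for your organization.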